Criteria for Fully Automated Provisioning

Damon Edwards

“Done” is one of those interesting words. Everyone knows what it means in the abstract sense. However, look at how much effort has to go into getting developers to agree that done really does mean 100% done (no testing, docs, formatting, acceptance, etc. left to do).

“Fully” is a similarly interesting word. I can’t tell you how many times I’ve encountered a situation where someone says that they’ve “fully automated” their deployments. Then, when they walk me through the steps involved in a typical deployment, it’s full of just-in-time hand-editing of scripts, copying and pasting, fetching of artifacts, manual “finishing” or “verification” steps, and things of that nature. Even worse, if you ask two different people to walk you through the same process, you might get two completely different versions, with “fully” definitely not meaning “fully”.

Just as Agile developers use the mantra “done means done”, operations needs the mantra “fully automated means fully automated”. Without a clear definition of what “fully automated” means, it’s going to be difficult to reach any kind of consensus around solutions.

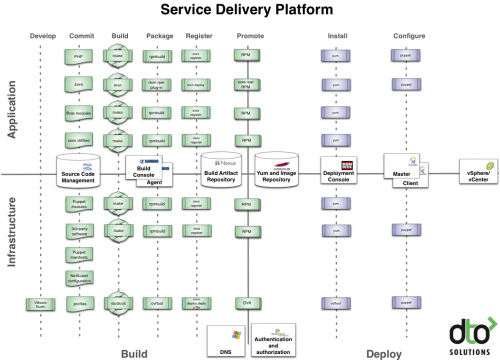

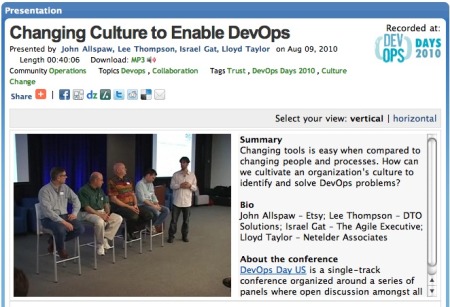

As part of the original “Web Ops 2.0: Achieving Fully Automated Provisioning” whitepaper, we listed a set of criteria for “Fully Automated Provisioning”. I’ve taken that content and posted it to the new DevOps Toolchain project. Hopefully it will spur some discussion on what “fully automated” actually means.

Here’s the initial list of criteria:

1. Be able to automatically provision an entire environment — from “bare-metal” to running business services — completely from specification

Starting with bare metal (or stock virtual machine images), can you provide a specification to your provisioning tools and have the tools in turn automatically deploy, configure, and start up your entire system and application stack? This means not leaving runtime decisions or “hand-tweaking” for the operator. The specification may vary from release to release or be broken down into individual parts provided to specific tools, but the calls to the tools and the automation itself should not vary from release to release (barring a significant architectural change).
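To make the idea of "the specification varies, the calls do not" concrete, here is a minimal sketch in Python. Everything in it is hypothetical and exists only for illustration: the `Spec` fields, the step names such as `install-os`, and the `provision` entry point are assumptions, not the interface of any real provisioning tool.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Spec:
    """Hypothetical release specification: all decisions live here, none at runtime."""
    release: str
    base_image: str
    packages: Tuple[str, ...] = ()
    services: Tuple[str, ...] = ()


def plan(spec: Spec) -> List[str]:
    """Turn a specification into an ordered list of provisioning steps."""
    steps = [f"install-os {spec.base_image}"]
    steps += [f"install-package {name}" for name in spec.packages]
    steps += [f"configure {name}" for name in spec.services]
    steps += [f"start {name}" for name in spec.services]
    return steps


def provision(spec: Spec, run: Callable[[str], None] = print) -> List[str]:
    """The same call for every release; only the spec passed in changes."""
    steps = plan(spec)
    for step in steps:
        run(step)  # a real system would hand each step to a tool here
    return steps
```

Calling `provision(Spec(release="1.4.2", base_image="stock-linux", packages=("nginx",), services=("web",)))` would print the four steps in order. The design point is that a new release means a new `Spec` value, not a new script or a hand-edited command line.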

2. No direct management of individual boxes

This is as much a cultural change as it is a question of tooling. Access to individual machines for purposes other than diagnostics or performance analysis should be highly frowned upon and strictly controlled. All deployments, updates, and fixes must be deployed only through specification-driven provisioning tools that in turn manage each individual server to achieve the desired result.

3. Be able to revert to a “previously known good” state at any time

Many web operations lack the capability to roll back to a “previously known good” state. Once an upgrade process has begun, they are forced to push forward and firefight until they reach a functionally acceptable state. With fully automated provisioning you should be able to supply your provisioning system with a previously known good specification that will automatically return your applications to a functionally acceptable state. The most successful rollback strategy is best described as “rolling forward to a previous version”. Database issues are generally the primary complication with any rollback strategy, but it is rare to find a situation where a workable strategy can’t be achieved.
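One way to picture “rolling forward to a previous version” is that a rollback is just another deployment, using an older specification and the same automated path. The sketch below assumes a hypothetical `ReleaseHistory` helper and treats specifications as opaque identifiers; it deliberately ignores the database complications mentioned above.

```python
from typing import Callable, List, Optional


class ReleaseHistory:
    """Hypothetical record of deployed specifications and which were known good."""

    def __init__(self, apply: Callable[[str], None]) -> None:
        self._apply = apply              # the normal, automated deploy path
        self._known_good: List[str] = []
        self.current: Optional[str] = None

    def deploy(self, spec_id: str) -> None:
        """Deploy a specification through the one and only deploy path."""
        self._apply(spec_id)
        self.current = spec_id

    def mark_good(self) -> None:
        """Record the current specification as known good (once)."""
        if self.current is not None and self.current not in self._known_good:
            self._known_good.append(self.current)

    def roll_back(self) -> str:
        """Roll forward to the most recent known good spec other than the current one."""
        candidates = [s for s in self._known_good if s != self.current]
        if not candidates:
            raise RuntimeError("no previously known good specification recorded")
        target = candidates[-1]
        self.deploy(target)              # same call as any other release
        return target
```

Because `roll_back` calls `deploy`, there is no separate, rarely exercised "undo" procedure to maintain; the rollback path is tested every time a release goes out.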

4. It’s easier to re-provision than it is to repair

This is a litmus test. If your automation is implemented correctly, you will find it is easier to re-provision your applications than it is to attempt to repair them in place. “Re-provisioning” could simply mean an automated cycle of validating and regenerating application and system configurations or it could mean a full provisioning cycle from the base OS up to running business applications.
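The lighter form of re-provisioning, validating and regenerating configurations, can be sketched as follows. This is an illustration under stated assumptions: configuration files are modeled as an in-memory mapping of path to content, and `reprovision` is a hypothetical name, not a function from any real tool.

```python
from typing import Dict, List


def reprovision(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Validate each managed file against the spec and regenerate it on any mismatch.

    `desired` maps path -> content rendered from the specification.
    `actual` maps path -> content currently on the machine; it is updated in place.
    Returns the sorted list of paths that had to be regenerated.
    """
    regenerated = []
    for path in sorted(desired):
        if actual.get(path) != desired[path]:
            # No attempt to diagnose or patch the drift: overwrite from the spec.
            actual[path] = desired[path]
            regenerated.append(path)
    return regenerated
```

Running it a second time with the same inputs regenerates nothing, which is what makes re-provisioning safe to use as the default response: nobody has to work out what was changed by hand, only that the machine no longer matches the specification.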

5. Anyone on your team with minimal domain-specific knowledge can deploy or update an environment

You don’t always want your most junior staff to be handling provisioning, but with a fully automated provisioning system they should be able to do just that. Once your domain-specific experts collaborate on the specification for that release, anyone familiar with a few basic commands (and having the correct security permissions) should be able to deploy that release to any integrated development, test, or production environment.

This is a good start!

-Jesse

I couldn’t agree more, but I would add another criterion, one that may help this effort: make the service sharable. See my blog post on the term Softservice Distro.

Thanks for your post

I've been doing these things for several years using the FAI (Fully Automatic Installation) software. For me it was also very important to emphasize the "Fully" in this software. Besides bare-metal provisioning, FAI can also update a configuration; we call it softupdate. And it's not only for Debian-related Linux distributions; it can also install and configure RPM-based distributions. FAI was started in 1999 and has a lot of experience in provisioning and rollout.

Have a look at it:

http://fai-project.org