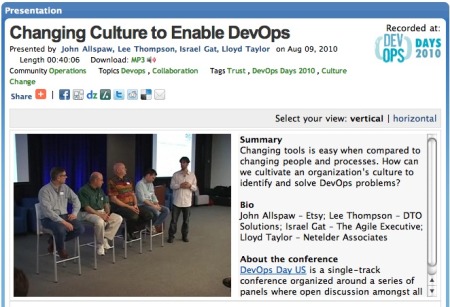

Q&A: Ernest Mueller on bringing Agile to operations

Damon Edwards

“I say this as somebody who about 15 years ago chose system administration over development. But system administration and system administrators have allowed themselves to lag in maturity behind what the state of the art is. These new technologies are finally causing us to be held to account to modernize the way we do things. And I think that’s a welcome and healthy challenge.”

-Ernest Mueller

I met Ernest Mueller back at OpsCamp Austin and have been following his blog ever since. As a Web Systems Architect at National Instruments, Ernest has had some interesting experiences to share. Like so many of us, he’s been “trying to solve DevOps problems long before the word DevOps came around”!

Ernest was kind enough to submit himself to an interview for our readers. We talked at length about his experiences bringing Agile principles to the operations side of a traditional large enterprise IT environment. Below are the highlights of that conversation. I hope you get as much out of it as I did.

Damon:

What were the circumstances that led you down the path of bringing Agile principles to your operations work?

Ernest:

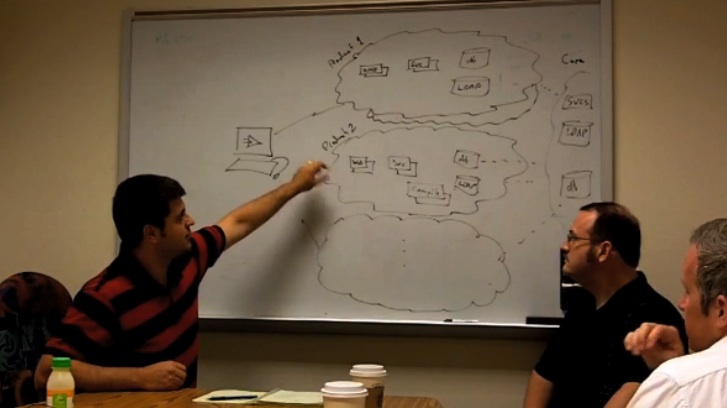

I’ve been at National Instruments for, oh, seven years now. Initially, I was working on and leading our Web systems team, which handled the systems side of our Web site. Over time, it became clear that we needed more of a hand in the genesis of the programs going out onto the Web site, so we could build in operational concerns like reliability and performance.

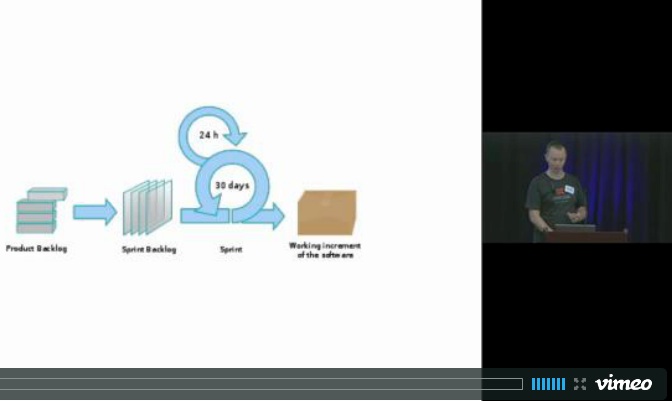

So we kind of turned into a team that advocated for that, and started working with the development teams pretty early in their lifecycle to make sure that performance, availability, security, and all those concerns were being designed into the system as an overall whole. And as those teams moved towards using Agile methodologies more and more, a bit of a disconnect started to appear.

Prior to that, when they had been using Waterfall, we aligned with them and developed what we called the Systems Development Framework, which was kind of a systems equivalent of a software development lifecycle, to help the developers understand what we needed to do along with them. We got to a point where that seemed to be going very well. And then Agile started coming in and threw us off a bit more.

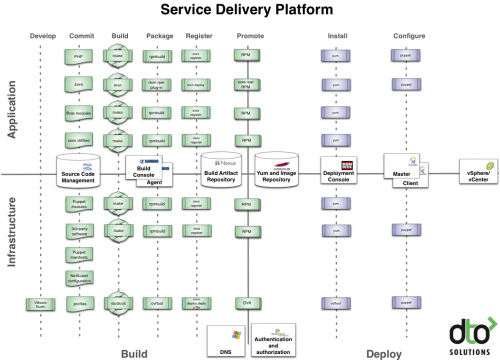

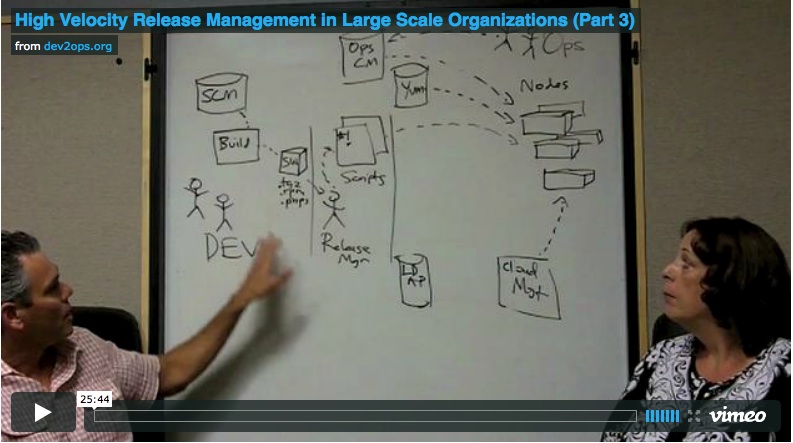

And the infrastructure teams – ours and others – more and more started to become the bottleneck to getting systems out the door. Some of that was natural because we have 40 developers and a team of five systems engineers, right? But some of that was because, overall, the infrastructure teams’ cadence was built around very long-term Waterfall.

As technologies like virtualization and Cloud Computing became available, we started to see more clearly why that was. Once you’re able to provision machines that way, a huge long pole that was usually on the critical path of any project falls out, because, best case, if you wanted a server procured, built, and handed to you, you were talking six weeks of lead time.

And so, to an extent there was always – I hate to say an excuse, but a somewhat meaningful reason for not being able to operate on that same sort of quick cycle cadence that Agile Development works along. So once those technologies started coming down and we started to see, “Hey, we actually can start doing that,” we started to try it out and saw the benefits of working with the developers, quote, “their way” along the same cadence.

Damon:

When you first heard about these Agile ideas, that developers were moving towards short sprints and iterative cycles, were you skeptical or concerned that there was going to be a mismatch? What were your doubts?

Ernest:

Absolutely, it was very concerning, because when folks started adopting Agile there was less upfront planning, design, and architecture being done. So things they needed from the systems team that required a large amount of lead time wouldn’t get done appropriately. They often didn’t figure out until their final iteration that, “Oh, we need some sort of major systems change.” We would always get into these crunches where they decided, “Oh, we need a Jabber server,” and they decided it two weeks before they were supposed to go into test with their final version. It was an unpleasant experience for us, because we felt we had built up a process that had gotten us very well aligned with the previous model, just from a relationship point of view between development and operations. And this was coming in and “messing that all up.”

Initially, there were just infrastructure realities that meant you couldn’t keep pace with that. Or, well, “couldn’t” is a strong word. Historically, automation has always been an afterthought for infrastructure people. You build everything and you get it running. And then, if you can, you go back and retrofit automation onto it in your copious spare time. That is, unless you’re faced with some sort of huge thousand-server-scale deal because you’re working for one of the massive shops.

But everywhere else, where you’re dealing with 5, 10, or 20 servers at a time, automation was always seen as a luxury, partly because of the historical lack of automation tools, but also partly because purchasing and installing hardware has a long time lag associated with it. We initially weren’t able to keep up with the Agile iterations, and not only the projects but also the relationships among the teams suffered somewhat.

Even once we started to try to get on the Agile path, it was very foreign to the other infrastructure teams; even things like using revision control and creating tests for your own systems were considered “apps things” and were odd and unfamiliar.

Damon:

So how did you approach and overcome that skepticism and unfamiliarity that the rest of the team had toward becoming more Agile?

Ernest:

There were two ways. One was evangelism. The other way, and I [laughter] hesitate to trumpet this as the right way, was mostly to spin off a new team dedicated to the concept, and to use technologies like Cloud and virtualization to take other teams out of the loop when we could.

For the new products we’re working on right now, we’ve essentially made the strategic decision that, since we’re using Cloud Computing, all the “hardware procurement and system administration stuff” is something we can do in a smaller team on a product-by-product basis, without relying on the traditional organization until they’re able to move to where they can take advantage of some of these new concepts.

And they’re working on that. There are people internally eyeballing ITIL, and recently a bunch of us bought the Visible Ops book and are having a little book club on it to kinda get people moving along that path. But we essentially had to incubate a smaller effort, a schism from the traditional organization, in order to really implement it.

Damon:

You’ve mentioned Agile, ITIL, and Visible Ops. How do you see those ideas aligning? Are they compatible?

Ernest:

I know some people decry ITIL and see process as a hindrance to agility instead of an asset. I think it’s a problem very similar to the one development teams have faced. We actually just went through one of those Microsoft Application Lifecycle Management Assessments, where they talk with all the developers about the build processes and all of that, and it ends up being the same kind of discussion. Things like using version control and having coordinated builds are all a hindrance to a single person’s velocity, right? Why do it if I don’t need it?

You know if I’m just so cool that I never need revision control for whatever reason, [laughter] then having it doesn’t benefit me specifically. But I think developers have gotten more used to the fact that, “Hey, having these things actually does help us in the long term, from the group level, come out with a better product.”

And operations folks have long seen the value of process because they value stability. They’re the ones that get dinged on audits and things like that, so operations folks have seen the benefits of process in general. So when they talk about ITIL, there are occasional people who grumble, “Well, that will just be more slow process,” but realistically, they’re all into process. [laughter] It all just depends on what sort and how mature it is.

As for how to bridge ITIL to Agile, [pause] look at what the Visible Ops book has tried to do in terms of cutting ITIL down to priorities. What are the most important things that you need to do? Do those first, and do more of those. What are the should-dos after that? What are the nice-to-haves after that? A lot of times, in operations, we can draw up a huge map of every safeguard that any system we’ve worked on has ever had, and we count that as the blueprint for the next successful system. But that’s not realistic.

It’s similar to when you develop features. You have to be somewhat ruthless about prioritizing what’s really important and deprioritizing the things that aren’t important so that you can deliver on time. And if you end up having enough time and effort to do all that stuff, that’s great.

But if you work under the iterative model and focus first on the important stuff and finish it, and then on the secondary stuff and finish it, and then on the tertiary stuff, then you get the same benefit the developers are getting out of Agile, which is the ability to put a fork in something and call it done, based on time constraints and knowing that you’ve done the best that you can with that time.

Damon:

You mentioned the idea of bringing testing to operations and how that’s a bit of a culture shift away from how operations traditionally worked. How did you overcome that? What was the path you took to improve testing in operations?

Ernest:

The first time where it really made itself clear to me was during a project where we were conducting a core network upgrade on our Austin campus and there were a lot of changes going along with that. I got tapped to project manage the release.

We had this long and complex plan where the network people would bring the network up, and then the storage team would bring the storage up, and the Unix administrators would bring all the core Unix servers up. It became clear to me that nobody was actually planning on doing any verification of whether their things were working right.

We’d done some dry runs and, for example, the Unix admins would boot all their servers and wander off, and half of their NFS mounts would hang. And they would say, “Well, I’m sure, three hours later, once the applications start running, the developers testing those will see problems because of it, and then I’ll find out that my NFS mounts are hanging, right?” [laughter].

Since I’m always eager not to disrupt the people up the chain if at all possible, that answer aggrieved me. I started talking to them about it, and that’s when it first became clear to me that the same unit test and integration test concerns are equally applicable to infrastructure folks and application folks. For that release, we quickly implemented a bunch of tests to give us some idea of the state of the systems: ping sweeps from all boxes to all boxes to determine whether systems or subnets couldn’t see each other, and NFS mount check scripts distributed via cfengine to verify that the mounts were healthy. The resulting release process and tests have been reused for every network release since, because of how well and quickly they detect problems.
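An all-to-all ping sweep splits naturally into two halves. First, each box probes every host in a shared inventory. Then the rows are collected and scanned for failures. The Python below is a hypothetical sketch, not the team’s actual scripts. `ping_once`, `sweep`, and `find_partitions` are illustrative names. The code assumes Linux iputils `ping` flags (`-c` is the count, `-W` the timeout in seconds; other platforms differ) and assumes /24 subnets. It does not cover how each box’s row gets shipped back (cfengine, ssh, a shared directory).

```python
import ipaddress
import subprocess
from collections import defaultdict


def ping_once(host: str, timeout_s: int = 1) -> bool:
    """Send one ICMP echo request; True if it was answered.

    Assumes Linux iputils ping: -c is the packet count, -W the reply timeout in seconds.
    """
    proc = subprocess.run(
        ["ping", "-c", "1", "-W", str(timeout_s), host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return proc.returncode == 0


def sweep(hosts, probe=ping_once):
    """Run on ONE box: probe every host in the shared inventory."""
    return {host: probe(host) for host in hosts}


def subnet_of(ip: str, prefix: int = 24) -> str:
    """Map an address to its network, e.g. 10.0.1.5 -> 10.0.1.0/24 (assumed /24)."""
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))


def find_partitions(results, prefix: int = 24):
    """Scan the all-to-all matrix {source: {destination: reachable}}.

    Returns (failed host pairs, subnet pairs where no probe succeeded at all).
    """
    failed_pairs = []
    any_success = defaultdict(bool)  # (src_net, dst_net) -> saw at least one reply
    for src, row in results.items():
        for dst, reachable in row.items():
            if src == dst:
                continue
            key = (subnet_of(src, prefix), subnet_of(dst, prefix))
            any_success[key] = any_success[key] or reachable
            if not reachable:
                failed_pairs.append((src, dst))
    dead_links = [pair for pair, ok in any_success.items() if not ok]
    return sorted(failed_pairs), sorted(dead_links)
```

Grouping failures by subnet pair helps separate "one box is unreachable" from "this subnet can’t see that one," which are the two cases the sweep was meant to catch.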

It’s difficult when there’s no precedent, or at least we didn’t know of anybody else who had really done that. If you go out there and Google, “What does an infrastructure unit test look like?”, you don’t get a lot of answers. [Laughter] So we were, to an extent, experimenting to figure out what a meaningful unit test is. If I build a Tomcat server, what does it mean to have a unit test I can execute on it even before there are “business applications” deployed to it?
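Here is one possible answer to the Tomcat question, offered only as a sketch. The idea is to test the layers the server itself owns before any application exists. The Python below checks that something is listening on the connector port and that it answers HTTP. Any status below 500 counts as a pass, because a bare container with no webapps deployed may legitimately return 404. The port 8080 default and the `smoke_test`, `port_open`, and `http_status` helpers are assumptions for illustration. The interview does not describe them.

```python
import http.client
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Layer 1: is anything accepting TCP connections on the connector port?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_status(host: str, port: int, path: str = "/", timeout: float = 3.0):
    """Layer 2: does the server speak HTTP? Returns the status code, or None."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        return conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return None
    finally:
        conn.close()


def smoke_test(host: str, port: int = 8080, path: str = "/") -> list:
    """Return failure messages; an empty list means the bare server passed.

    Port 8080 is an assumed default. Statuses below 500 pass, since an empty
    container may answer 404 for "/" and that still proves it is serving.
    """
    if not port_open(host, port):
        return [f"nothing listening on {host}:{port}"]
    status = http_status(host, port, path)
    if status is None:
        return [f"{host}:{port} accepted TCP but did not answer HTTP"]
    if status >= 500:
        return [f"GET {path} returned {status}"]
    return []
```

Because the function returns a list rather than exiting, the same check could run once at build time and later from a scheduler.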

I would say we’re still in the process of figuring that out. We’re trying to build our architecture so that there are places for those unit tests, ideally, both when we build servers, but we’d like them to be a reasonable part of ongoing monitoring as well.

Damon:

How are you writing those “unit tests for operations”? Are they automated? If so, are you using scripts or some sort of test harness or monitoring tool?

Ernest:

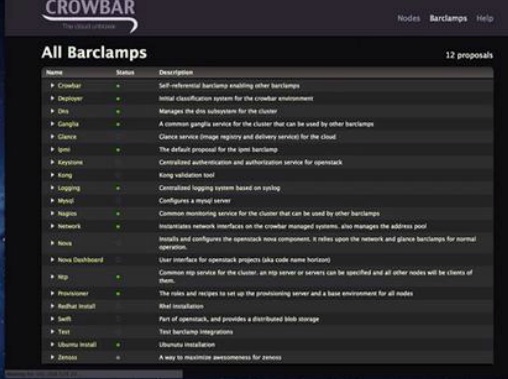

So right now the tests are only scripted. We would be very interested in figuring out if there’s a test harness that we could use that would allow us to do that. You can kind of retrofit existing monitoring tools, like a Nagios or whatever to run scripts and kinda call it a test harness. But of course it’s not really purpose-built for that.
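A scripted check can serve both roles mentioned earlier, build-time test and ongoing monitoring. To do so, it follows the Nagios plugin convention: print one status line and exit 0 (OK), 1 (WARNING), 2 (CRITICAL), or 3 (UNKNOWN). Below is a hypothetical Python sketch of an NFS mount check in that style. It is not the cfengine-distributed script from the network upgrade, and every function name is illustrative. It probes each mount point in a daemon thread with a timeout, since a stat against a dead NFS server can block indefinitely. It also flags a directory that exists but has nothing mounted on it.

```python
import os
import threading

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def probe_mount(path: str, timeout: float = 5.0) -> str:
    """Classify one expected mount point: 'ok', 'missing', 'not_mounted', 'hung', or 'error: ...'."""
    result = {}

    def probe():
        try:
            if not os.path.isdir(path):
                result["state"] = "missing"
            elif not os.path.ismount(path):
                # The directory exists but nothing is mounted on it, so writes
                # would silently land on the local disk underneath.
                result["state"] = "not_mounted"
            else:
                os.listdir(path)  # force a real round trip to the file server
                result["state"] = "ok"
        except OSError as exc:
            result["state"] = f"error: {exc}"

    # A stat() against a dead NFS server can block forever; running the probe
    # in a daemon thread lets us give up after `timeout` and still exit cleanly.
    worker = threading.Thread(target=probe, daemon=True)
    worker.start()
    worker.join(timeout)
    return result.get("state", "hung")


def check_mounts(paths, timeout: float = 5.0):
    """Aggregate per-mount results into a (Nagios exit code, status line) pair."""
    if not paths:
        return UNKNOWN, "NFS UNKNOWN - no mount points given"
    states = {path: probe_mount(path, timeout) for path in paths}
    bad = sorted((p, s) for p, s in states.items() if s != "ok")
    if bad:
        detail = ", ".join(f"{p}={s}" for p, s in bad)
        return CRITICAL, f"NFS CRITICAL - {detail}"
    return OK, f"NFS OK - {len(paths)} mount(s) responding"


def main(argv) -> int:
    """Plugin entry point: print one status line, return the exit code."""
    code, message = check_mounts(argv)
    print(message)
    return code
```

Installed as a plugin, the file would end with `sys.exit(main(sys.argv[1:]))` and be invoked with the expected mount points as arguments, for example `/mnt/shared` (a made-up path). The same invocation could run right after a server build.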

Damon:

Any tips for people just starting down the DevOps or Agile Operations path?

Ernest:

Well, I would say the first thing is try to understand what it is the developers do and understand why they do it. Not just because they’re your customer, but because a lot of those development best practices are things you need to be doing too. The second thing I would say is try to find a small prototype or skunkworks project where you can implement those things to prove it out.

It’s nearly impossible to get an entire IT department to suddenly change over to a new methodology just because they all see a PowerPoint presentation and think it’s gonna be better, right? That’s just not the way the world works. But you can take a separate initiative, run it according to those principles, and let people see the difference it makes. And then expand it back out from there. I think that’s the only way we’ve found to be successful at it here.

Damon:

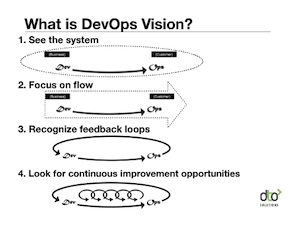

Why are DevOps and Agile Operations becoming a hot topic now?

Ernest:

I believe one of the reasons this is becoming a much more pervasively understood topic is virtualization and Cloud Computing. Now that provisioning can happen much more quickly on the infrastructure side, it’s serving as a wake-up call, and people are asking, “Well, why isn’t the rest of it that fast?”

When we implemented virtualization, we got a big VMware farm put in. One of the things I had hoped was that the six-week lead time for me to get a server was going to go down because, of course, “in VMware you just click and you make yourself a new one,” right? Well, the reality was you would still put in a request for a new server, and it would still have to go through procurement because, you know, somebody needed to buy the Red Hat license or whatever.

And then you’d file a request, and the VMware team would get around to provisioning the VM, and then you’d file another request, and the Unix or Windows administration team would get around to provisioning an OS on it. It still took about a month, right, for something that takes 15 minutes when the sales guys do the VMware demo. And at that point, because the “we had to buy hardware” excuse was no longer there, it became a lot more clear that no, the problem is our processes. The problem is that we’re not valuing Agility over a lot of these other things.

And in general, we infrastructure teams organized ourselves in a way that was almost antithetical to agility. It’s all about reliability and cost efficiency, which are also laudable goals, but you can’t sacrifice agility at their altar (and you don’t have to). And I think that’s what a lot of people are starting to see. They get this new technology in their hands, and they’re like, “Oh, okay, if I’m really gonna dynamically scale servers, I can’t do automation later. I can’t do things the manual way and then, eventually, get around to doing it the right way. I have to consider doing it the right way out of the gate.”

I say this as somebody who about 15 years ago chose system administration over development. But system administration and system administrators have allowed themselves to lag in maturity behind what the state of the art is. These new technologies are finally causing us to be held to account to modernize the way we do things. And I think that’s a welcome and healthy challenge.