The “forklift” upgrade or replacement is an attractive option for high-volume hardware environments. Adding or moving things as one pre-configured mass is compelling, but the approach shouldn’t be applied overzealously to other domains… especially application deployment.

I remember when word first got out that Google simply tossed servers in the trash at the first sign of failure. Later came the news that Google had pre-configured units of infrastructure – servers, switches, racks, etc. – that it just “popped” into place when new capacity was needed. Many found this new paradigm shocking, but it did shine an early light on the forklift concept.

Jump forward to today and the forklift idea has matured even further. Sun is now shipping the Modular Datacenter (formerly known by its sexier name, Project Blackbox), a cargo container with an entire pre-configured datacenter inside. Add power, connectivity, and water (for cooling) and the thing hums. Need more capacity? The truck arrives with another unit.

But the forklift paradigm isn’t limited to hardware these days. With the rise of virtualization, the same concepts are being applied to the software domain. Check out this recent press release from VMware. They are advocating the forklift model for production deployment: tinker with an image until you like it, snapshot it, copy it a bunch of times, and then move the whole lot of server images en masse to the production environment. It sounds reasonable in theory, but in practice it often creates more problems than it solves.

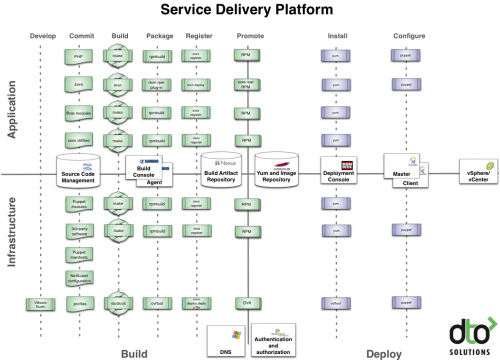

“Snapshot and copy” (the forklift of the software world) works well for OS provisioning. But when it comes to application provisioning, it’s just not so simple. The key to any deployment process is configuring and coordinating actions across software components, which naturally means across OS instances, physical or virtual. That work remains even after you’ve adopted snapshot and copy, so you are still writing your own complex scripts or performing many steps by hand.

I’ve seen several situations lately where an organization embraced the promise of virtualization and its snapshot and copy paradigm, only to find itself, in time, with a more complex and difficult environment to manage. What worked well at small scale eventually turned into the Service Monolith hairball that Alex discussed in his previous post. The primary culprit? The belief that snapshot and copy replaces the need for tooling that can build any OS or integrated application from a specification-driven, fully automated process.
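To make the contrast concrete, here is a minimal Python sketch of the kind of work a specification-driven process does and a cloned image does not. The component names, hosts, and settings are hypothetical, and a real tool would also provision the OS and push packages and files; the sketch only shows the two pieces a snapshot cannot carry by itself: per-instance configuration and the order in which components must be brought up across machines.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical specification: each component names the host it runs on,
# the components it depends on, and its per-instance settings.
SPEC = {
    "db":    {"host": "vm-01", "depends_on": [], "config": {"port": 5432}},
    "cache": {"host": "vm-02", "depends_on": [], "config": {"port": 6379}},
    "app":   {"host": "vm-03", "depends_on": ["db", "cache"],
              "config": {"db_url": "db://vm-01:5432"}},
    "web":   {"host": "vm-04", "depends_on": ["app"],
              "config": {"upstream": "vm-03"}},
}


def deployment_plan(spec):
    """Return ordered (host, component, config) steps so that every component
    is configured and started only after the components it depends on.

    Raises graphlib.CycleError if the specification contains a dependency cycle.
    """
    # TopologicalSorter expects a mapping of node -> its predecessors.
    graph = {name: set(c["depends_on"]) for name, c in spec.items()}
    order = TopologicalSorter(graph).static_order()
    return [(spec[name]["host"], name, spec[name]["config"]) for name in order]


if __name__ == "__main__":
    for host, name, config in deployment_plan(SPEC):
        print(f"{host}: configure and start {name} with {config}")
```

Because the plan is derived from the specification rather than from a hand-tuned image, adding a host or changing a connection string means editing one entry and regenerating the plan, not re-snapshotting and re-copying every image.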

Don’t get me wrong, I’m a big fan of virtualization. We use it extensively ourselves and build it into solutions for our clients. It solves a lot of problems, just not all of them. So remember: when designing your application deployment process, bring the rest of your tools along with your forklift.