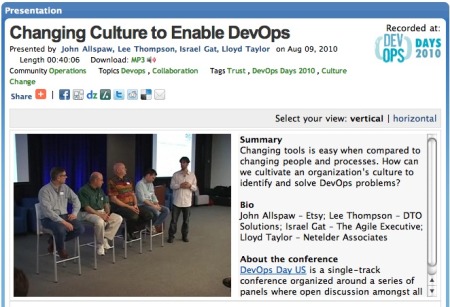

I was honored to be asked to speak at DevOps Days in Manila and just got off stage. I was blown away when I found out over 400 people signed up to attend. Speaking gives me a chance to unload a bunch of baggage I’ve been carrying around for years.

We all bring a lot of baggage with us into a job. The older you are, the more you bring. The first part of my career I did 10 years of real-time industrial control software design, implementation, and integration way way back before the web 1.0 days. Yes, I wrote the software for the furniture Homer Simpson sat in front of at the nuclear plant that was all sticky with donut crumbs…

I took that manufacturing background baggage to E*TRADE in ’96, where I ran into fellow dev2ops contributor Alex Honor, who brought his Ames Research Laboratory baggage of (at the time) massive compute infrastructure and mobile agents. We used to drink a bunch of coffee and try to figure out how this whole internet e-commerce thing needed to be put together. We’d get up crazy early at 4:30AM, listen to Miles, and watch the booming online world wake up and trade stocks, and by 9:00AM we’d have a game plan formulated to make it better.

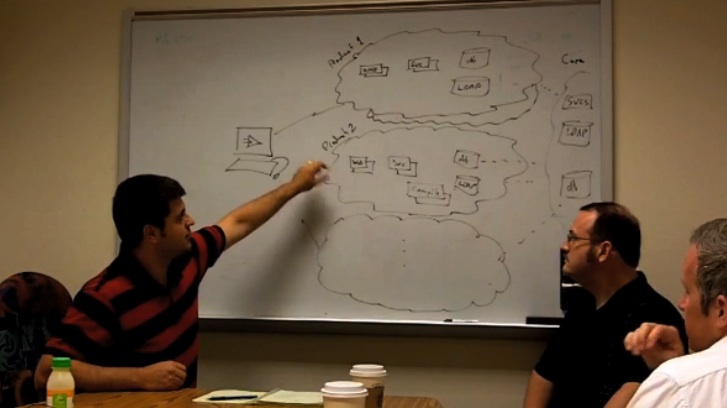

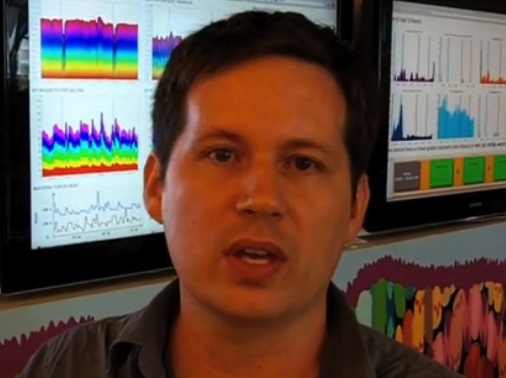

My manufacturing background was always kicking in at those times, looking for control points: webserver hits per second, firewall Mbits/sec, auth successes or failures per second, trades per second, quotes per second, service queue depths, and the dreaded position request response time. I was quite sure there was a correlation algorithm between these phenomena and that I could figure it out if I had a few weeks that I didn’t have. I also knew that once I figured it out, the underlying hardware, software, network, and user demand would change radically, throwing my math off. Controlling physical phenomena like oil, paper, and pharmaceutical products followed the math of physics. We didn’t have the math to predict operating system thread/process starvation, and it took us years to figure out that OS context switches per second are a huge kernel scalability issue not often measured or written about.

One particularly busy morning in late ’96, Alex was watching our webserver, pointed at a measurement on the screen, and said, “I think we’re gonna need another webserver”. With that, we also needed to figure out how to load balance webservers. As usual for the era, two webservers was a massive understatement. Within a year, there was more compute infrastructure at E*TRADE supporting the HTTPS web pages than the rest of the trading system, and the trading system had been in place for 12 years by this time… Analytics of measurements (accompanied by jazz music) became an important part of our decision making.

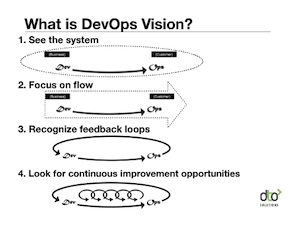

Alex and I were also convinced in early ’97 that sound manufacturing principles used in the physical world made a ton of sense to apply to the virtual online world of the internet. I’m still surprised the big control systems vendors like Honeywell and Emerson haven’t gotten into data center control. No matter, the DevOps community can make progress on it, as it’s so complementary to DevOps goals and it’s what the devops-toolchain project is all about.

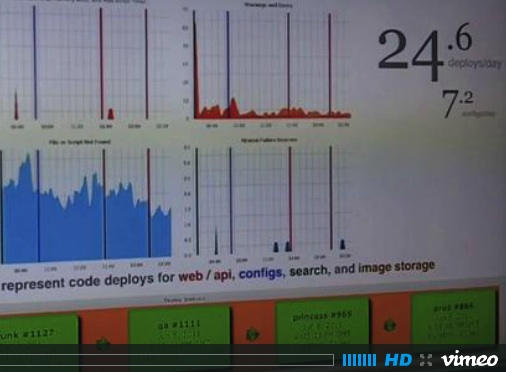

Get a bunch of DevOps folks together and the topic of monitoring comes up every time. I always have to ask “Are you happy with it?” and the answer is always “no” (though I don’t think anyone at Etsy was there). When you drill into what’s wrong with their monitoring, you may find that most companies have plenty of monitoring. What they don’t have is control.

Say your app in production runs 100 logins/sec and you are getting nominally 3 username/password failures a second. While the load may go up and down, you learn that the 3% ratio is nominal and in control. If the ratio climbs higher, that may be symptomatic of a script kiddie running a dictionary attack, the password hash database being offline, or an application change making it harder for users to properly input their credentials. If it drops down, that may indicate a professional cyber criminal is running an automated attack and getting through the wire. Truman may or may not have said “if you want a new idea, read an old book”. In this case, you should be reading about “Statistical Process Control” or SPC. It was heavily used during WWII. With our login example, the ratio of successful to failed login attempts would be “Control Charted” and the control chart would evaluate whether the control point was “in control” or “out of control” based on defined criteria like standard deviation thresholds.
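To make the login example concrete, here is a minimal sketch of one way it might be control charted. It assumes a simple proportion chart with three-sigma limits computed from a baseline period believed to be in control, and it charts failures as a share of all attempts. The function names, the one-minute window size, and the sample counts are all hypothetical and only there for illustration.

```python
import math

def control_limits(baseline, attempts, sigmas=3.0):
    """Return (center, lower, upper) for the failure ratio of one sample.

    baseline: list of (failures, attempts) pairs from a period believed
              to be in control (hypothetical data in this sketch).
    attempts: number of login attempts in the sample being judged.
    """
    total_failures = sum(f for f, _ in baseline)
    total_attempts = sum(n for _, n in baseline)
    center = total_failures / total_attempts
    # Standard error of a proportion for a sample of this size
    spread = sigmas * math.sqrt(center * (1 - center) / attempts)
    return center, max(0.0, center - spread), min(1.0, center + spread)

def classify(failures, attempts, baseline, sigmas=3.0):
    """Label one sample as in control or out of control (high or low)."""
    _, lower, upper = control_limits(baseline, attempts, sigmas)
    ratio = failures / attempts
    if ratio > upper:
        return "out of control (high)"
    if ratio < lower:
        return "out of control (low)"
    return "in control"

# Hypothetical one-minute windows at roughly 100 logins/sec and 3% failures
baseline = [(180, 6000), (175, 6000), (186, 6000), (179, 6000), (180, 6000)]

print(classify(182, 6000, baseline))  # in control
print(classify(420, 6000, baseline))  # out of control (high)
print(classify(60, 6000, baseline))   # out of control (low)
```

The point of the sketch is that both directions matter: the high case covers the dictionary attack or broken hash database, and the low case covers the suspiciously successful attacker. Real control charts often add run rules on top of the sigma limits, which this sketch leaves out.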

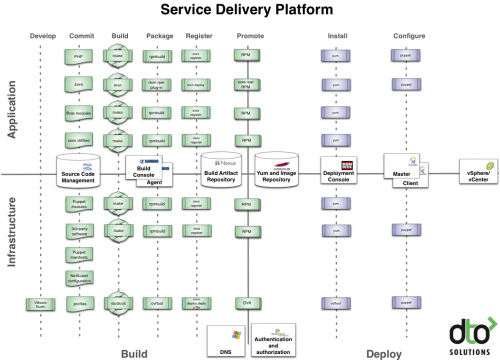

Measurement itself is a very low level construct providing the raw material for the control goal. You have to go through several more toolchain layers before you get to the automation you are looking for. We hit upon this concept in our talk at Velocity in 2010…

Manufacturing has come a long long way since WWII. Toyota built significantly on SPC methodologies, which eventually developed into “Lean Manufacturing”, a big part of the reason Toyota became the world’s largest automobile manufacturer in 2008. A key part of lean is Value Stream Mapping, which is “used to analyze and design the flow of materials and information required to bring a product or service to a consumer” (Wikipedia).

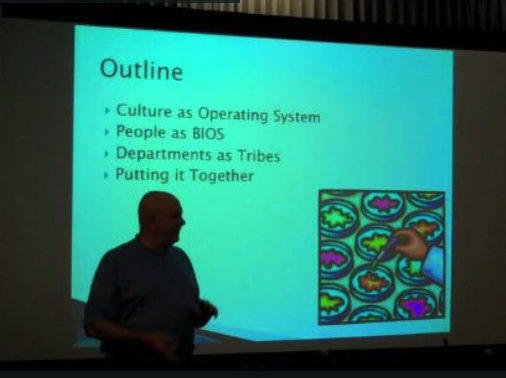

Value Stream Mapping a typical online business through marketing, product, development, QA, and operations flows will, at a minimum, help effectively communicate roles, responsibilities, and work flows through your org. More typically it becomes a tool to get to a “future state” which has eliminated waste and increased the effectiveness of the org, even when nothing physical was “manufactured”. I find agile development, devops, and continuous deployment goals all support lean manufacturing thinking. My personal take is that ITIL has similar goals, but is more of a process-over-people approach instead of a people-over-process approach, and its utility will be dependent on the organization’s management structure and culture. I prefer people over process, but I do reference ITIL every time I find a rough or wasteful organizational process for ideas on recommending a future state.
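As a rough illustration of the kind of numbers a value stream map surfaces, the sketch below totals the working time and waiting time for each step and reports the overall lead time, the share of it spent actually working, and the step with the longest wait. The step names follow the flow described above; the `Step` type, the `summarize` helper, and every duration are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Step:
    name: str
    work_days: float  # time spent actively working on the item
    wait_days: float  # time the item sits idle before this step picks it up

def summarize(steps):
    """Total the stream and point at the step with the longest wait."""
    work = sum(s.work_days for s in steps)
    wait = sum(s.wait_days for s in steps)
    lead = work + wait
    longest = max(steps, key=lambda s: s.wait_days)
    return {
        "lead_time_days": lead,
        "value_added_ratio": work / lead,
        "longest_wait": longest.name,
    }

# Hypothetical current-state map for one feature
current_state = [
    Step("product", work_days=2, wait_days=1),
    Step("development", work_days=10, wait_days=3),
    Step("qa", work_days=3, wait_days=5),
    Step("operations", work_days=1, wait_days=15),
]

print(summarize(current_state))
```

With these made-up numbers, most of the lead time is waiting rather than working, and the longest wait sits in front of operations, which is where a future-state map would look first.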

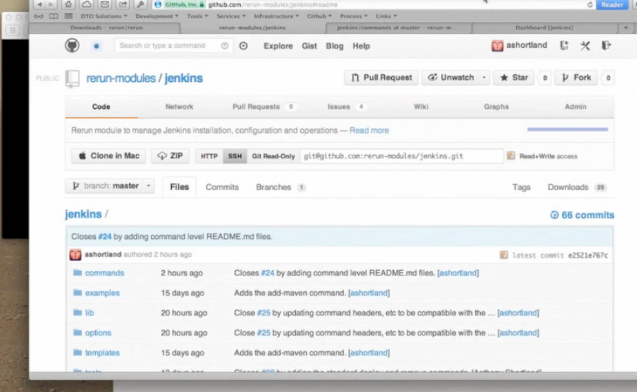

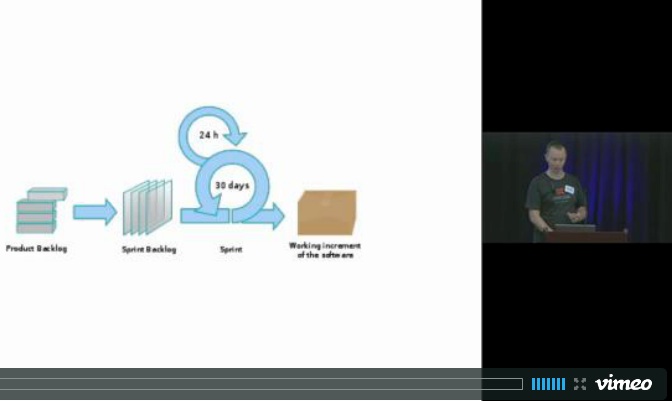

I was lucky enough to catch up with Alex, Anthony, and Damon over dinner and we were talking big about DevOps and Lean. Anthony mentioned that “we use value stream mapping in all of our DevOps engagements to make sure we are solving the right problem”. That really floored me on a few levels. First off, it takes Alex’s DevOps Design Patterns and DevOps Anti-Patterns to the next level, much as Lean built on SPC, adding a formalism to the DevOps implementation approach. It also adds a self-correcting aspect to a company’s investment in DevOps optimizations. I’ve spoken with many companies who made huge investments in converting to Agile development without any measurable uptick in product deployment rates. While these orgs haven’t reverted to a waterfall approach, as they like the iterative and collaborative approach, they hit the DevOps gap head on.

“We use Value-Stream Mapping in all of our DevOps engagements to make sure we are solving the right problem”

-Anthony Shortland (DTO Solutions)

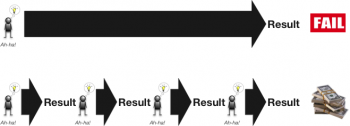

Practitioners of Lean Manufacturing see this all the time. Eliminating one bottleneck just moves the problem downstream to the next bottleneck. To expect greater production rates, you have to look at the value stream in its entirety. If developers were producing motors instead of software functions, a value stream manager would see a huge inventory buildup of motors that produce no value for the customer and identify the overproduction as waste. Development is a big part of the value stream and making that more efficient is a really good idea. But the growth of the release backlog is seldom measured or managed. If you treat your business as a Digital Information Manufacturing plant and manage it appropriately to that goal, you can avoid the frequent mistake Anthony and other Lean practitioners are talking about, where you solve a huge problem without benefiting the business or the customer.
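Measuring the release backlog does not have to be elaborate. The sketch below assumes you can count, per week, how many work items development finished and how many actually reached production; the difference accumulates as unreleased inventory. The function names and the weekly counts are hypothetical.

```python
from itertools import accumulate

def release_backlog(completed_per_week, released_per_week):
    """Running count of finished-but-unreleased items at the end of each week."""
    if len(completed_per_week) != len(released_per_week):
        raise ValueError("both series must cover the same weeks")
    net = [c - r for c, r in zip(completed_per_week, released_per_week)]
    return list(accumulate(net))

def is_growing(backlog, weeks=3):
    """True if the backlog rose in each of the last `weeks` week-over-week steps."""
    recent = backlog[-(weeks + 1):]
    if len(recent) < weeks + 1:
        return False
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))

# Hypothetical: development speeds up after going Agile, releases stay flat
completed = [10, 12, 15, 18, 20]
released = [10, 10, 10, 10, 10]

backlog = release_backlog(completed, released)
print(backlog)              # [0, 2, 7, 15, 25]
print(is_growing(backlog))  # True
```

In this made-up series development output doubles while the release rate never moves, so the inventory of "motors" piles up week after week. That growing number is the overproduction a value stream manager would flag.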

To sum up, DevOps-inspired technology can learn quite a bit from Lean Manufacturing and Value Stream Mapping. This DevOps stuff is really hard and you’ll need to leverage as much as possible. Always remember that “Good programmers are lazy” and it’s good when you apply established tools and techniques. If you don’t think you’re working in a Digital Information Manufacturing plant, I bet your CEO does.