6 Months In: Fully Automated Provisioning Revisited

Damon Edwards

It’s been about six months since I co-authored the “Web Ops 2.0: Achieving Fully Automated Provisioning” whitepaper along with the good folks at Reductive Labs (the team behind Puppet). While the paper was built on a case study about a joint user of ControlTier and Puppet (and a joint client of my employer, DTO Solutions, and Reductive Labs), the broader goal was to start a discussion around the concept of fully automated provisioning.

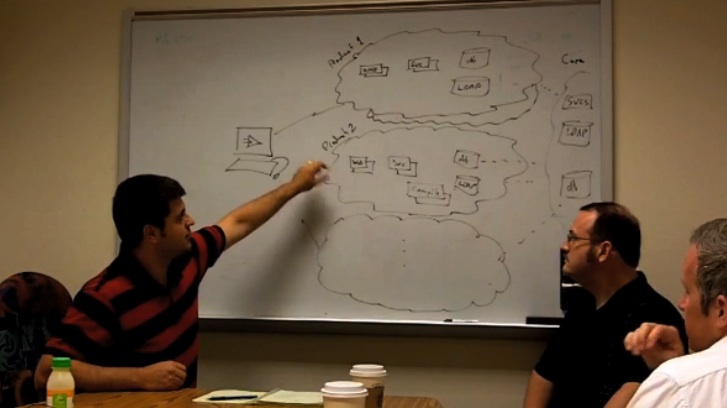

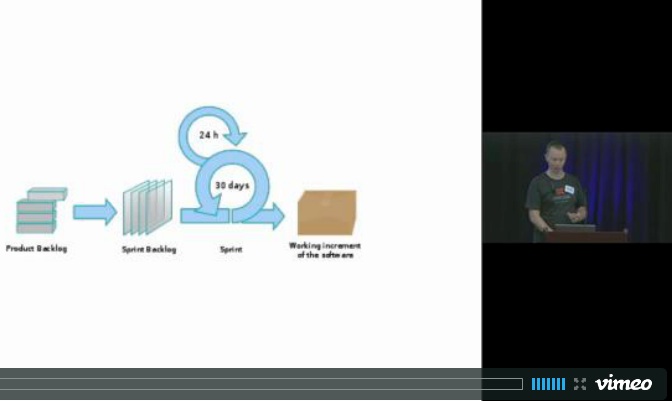

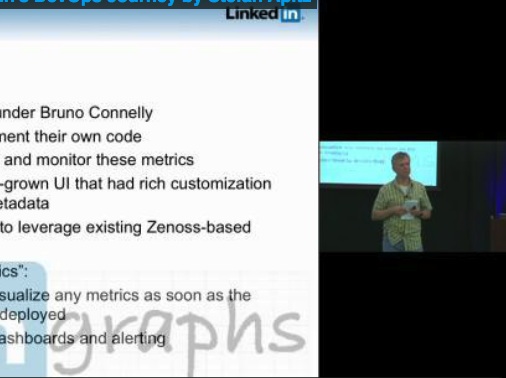

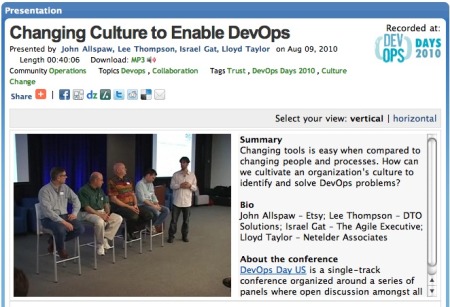

So far, so good. In addition to the feedback and lively discussion, we’ve just gotten word of the first independent presentation by a community member. Dan Nemec of Silverpop gave a great presentation at AWSome Atlanta (a meetup focused on cloud computing technology). John Willis was kind enough to record and post the video:

I’m currently working on an updated and expanded version of the whitepaper and am looking for any contributors who want to participate. Everything is being done under the Creative Commons (Attribution – Share Alike) license.

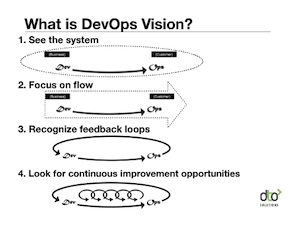

The core definition of “fully automated provisioning” hasn’t changed: the ability to deploy, update, and repair your application infrastructure using only pre-defined automated procedures.

Nor have the criteria for achieving fully automated provisioning:

- Be able to automatically provision an entire environment — from “bare-metal” to running business services — completely from specification

- No direct management of individual boxes

- Be able to revert to a “previously known good” state at any time

- It’s easier to re-provision than it is to repair

- Anyone on your team with minimal domain-specific knowledge can deploy or update an environment
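As a rough illustration of the first and third criteria (provisioning completely from specification, and reverting to a “previously known good” state), here is a minimal Python sketch. The names `EnvironmentSpec` and `Provisioner` are hypothetical and do not correspond to ControlTier, Puppet, or any other tool in the toolchain; a real system would drive OS installation, configuration management, and application deployment where this toy only updates an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EnvironmentSpec:
    """Hypothetical declarative description of an entire environment."""
    version: str
    nodes: tuple  # pairs of (node name, role), e.g. (("web01", "webserver"),)


@dataclass
class Provisioner:
    """Toy provisioner: all changes go through apply(), never box-by-box edits."""
    history: list = field(default_factory=list)  # specs applied so far, oldest first
    running: dict = field(default_factory=dict)  # node name -> role

    def apply(self, spec: EnvironmentSpec) -> None:
        # Rebuild the whole environment from the spec instead of repairing it in place.
        self.running = dict(spec.nodes)
        self.history.append(spec)

    def revert(self) -> EnvironmentSpec:
        # Go back to the previously known good spec by re-applying it.
        if len(self.history) < 2:
            raise RuntimeError("no previous known-good specification")
        self.history.pop()             # discard the current spec
        previous = self.history.pop()  # apply() pushes it back onto the history
        self.apply(previous)
        return previous
```

Because reverting is just another `apply()` of an earlier specification, the sketch also hints at why re-provisioning can be easier than repairing: there is only one code path to trust.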

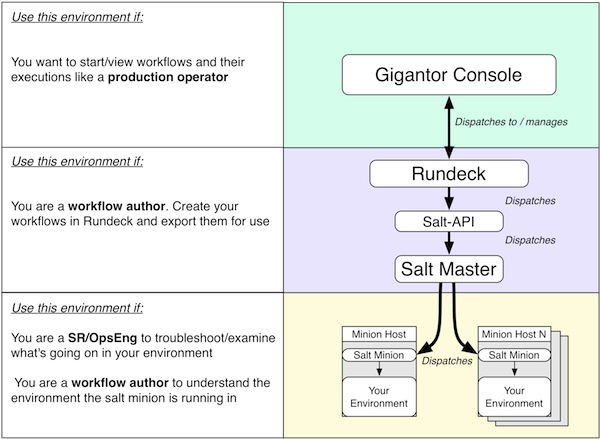

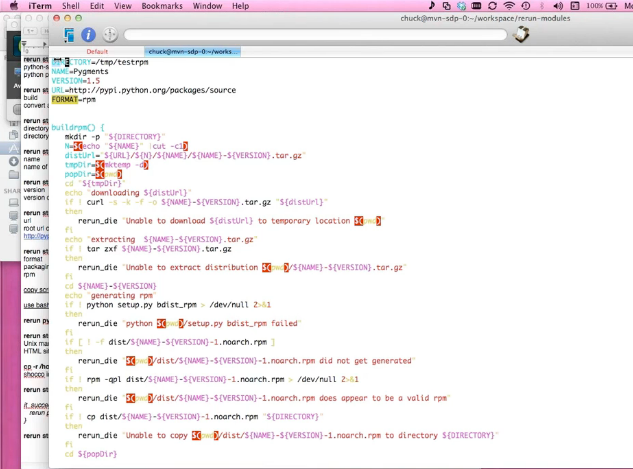

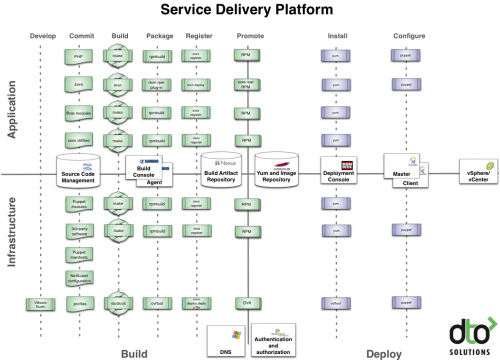

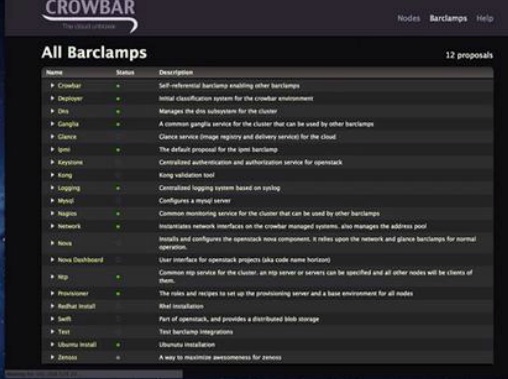

The representation of the open source toolchain has been updated and currently looks like this:

The new column on the left was added to describe the kinds of actions that take place at the corresponding layer. The middle column shows each layer of the toolchain. The right column lists examples of existing tools.

There are some other areas that are currently being discussed:

1. Where does application package management fall?

This is an interesting debate. Some people feel that all package distribution and management (system and application packages) should take place at the system configuration management layer. Others think that it’s appropriate for the system configuration management layer to handle system packages and the application service deployment layer to handle application and content packages.

2. How important is consistency across lifecycle?

It’s difficult to argue against consistency, but how far back into the lifecycle should the fully automated provisioning system reach? All Staging/QA environments? All integrated development environments? Individual developers’ systems? It’s a good rule of thumb to deal with non-functional requirements as early in the lifecycle as possible, but that imposes an overhead that must be dealt with.

3. Language debate

With a toolchain you are going to have different tools with varying methods of configuration. What kind of overhead are you adding because of differing languages or configuration syntax? Does individual bias towards a particular language or syntax come into play? Is it easier to bend (or some would say abuse) one tool to do almost everything rather than use a toolchain that lets each tool do what it’s supposed to be good at?

4. New case study

I’m working on adding more case studies. If anyone has a good example of any part of the toolchain in action, let me know.

<p>The question you raise: How important is consistency across lifecycle?</p>

<p>HUGE</p>

<p>Imagine if, instead of writing administration solutions, all these people started going after the root cause… systems originating from the 1970s that have fundamentally not changed… what gives? If a movement like this were to start, in a few years it would become the new standard, and suddenly polishing a turd would not be what people are striving for.</p>

<p>

If we start at the final deployment that the consumer will use and then work our way backwards through the life cycle, the further each environment is from the end system, the more risk it introduces. The earlier we can eliminate risk, the better our effectiveness and efficiency become. One of the most common reasons this problem is difficult to solve is the way product is developed. Generally speaking, our industry has architects of technology; we rarely have architects of product. My experience at a few start-ups in Silicon Valley has been… development (I work in development)… throws technology over the wall and IT/Ops figures out how to productize it. That model is hugely broken; I’ve not seen a team using this approach deliver predictably or on schedule.

</p>

<p>My primary focus is on building environments for development that result in deliverable products. This is easier in non-web environments, but should actually be triple simple in a web environment. My approach is to create a scenario where we can provide the developer with 2 environments that get as close as possible to the final product environment. On the developer’s laptop I deploy VMware with a provisioned CentOS that approximates production as closely as possible. The next environment, which you rarely see anymore (very common in OS development in the ’80s and early ’90s), is a pre-integration environment that again attempts to be as close as possible to the final product (BTW, this is huge in improving quality hand-offs to QA). And finally, Continuous Integration (our industry mostly practices this in a very inefficient manner… when set up correctly with pre-integration, teams should go months without failure. When that occurs, the tools, reporting and context switching change)…</p>

<p>

Now here is the rub… most of the tools being produced are horrible at expressing the environment variants we need in development. The Java community has standardized on XML (ever used ant… with for-loops, global immutable vars? It’s truly silly). Consider that we need to support both a development short-cycle (bread-n-butter, where the developer is making incremental changes, builds and tests) and the long-cycle (pre-integration). Consider that the development team needs to work with partners (protect IP), integration from partners (again IP protection), outsourcing, consultants, demos, prototypes, compilation and linking variants, customer variants, platform and chipset variants.</p>

<p>Tools like cfengine, nagios, ant, maven, anything using XML, anything that is purely declarative fail miserably at expressing configurations in such a dynamic and variant-based environment. These are very good tools; the problem they are trying to solve is hard. But in many cases the solutions are a herd of lemmings.</p>

<p>What might make a huge impact on solving this problem… select a vertical stack and, from the ground up, re-engineer it to be a fully managed stack, where the ability to provision, monitor and configure is considered as a whole. For example, what if the engineering desktop was: Mac OS X, with VMware, running CentOS (target platform). What if on the target platform all the services’ startup scripts were rewritten so they provide configuration management APIs, startup, shutdown, status, upgrade API… in other words, all services were written with the entire life cycle in mind…</p>

<p>Before Linux, users had little control over what they received from vendors. There is no excuse for Linux to be an unmanaged system. It should inherently be a managed system. This means ripping out all the 1970s and 1980s stuff that came from AT&T and putting in 2010 thinking. System administrators and management solution providers need to stop polishing turds and turn Linux into a fully manageable system.</p>

I really like the paper. I’ve been working on a fully automated provisioning tool called FAI (Fully Automatic Installation) for 11 years. It started as a provisioning-only tool but evolved into a configuration management tool. For config management the user can use shell, cfengine, perl or whichever other language fits best. For FAI, installing and configuring the OS or an application is mostly the same thing, since it always handles software packages. IMO it’s not always necessary to distinguish between OS and application software. FAI is also the only tool (besides imaging deployment) which can handle different Linux distributions.

Maybe you wanna have a look at it:

http://www.informatik.uni-koeln.de/fai/

Hi Damon,

I'd recommend that you include FAI (http://www.informatik.uni-koeln.de/fai/) in your white paper among the options for OS installation. It's mature, has a lot of documentation, and integrates with CfEngine (http://www.cfengine.org/), a great system configuration tool.

Great post BTW,

Alfredo

The FAI project has moved its $HOME. The new URL of the web pages is

http://fai-project.org

The wiki is now reachable at

http://wiki.fai-project.org