Integrating DevOps tools into a Service Delivery Platform (VIDEO)

Damon Edwards

The ecosystem of open source DevOps-friendly tools has experienced explosive growth in the past few years. There are so many great tools out there that finding the right one for a particular use case has become quite easy.

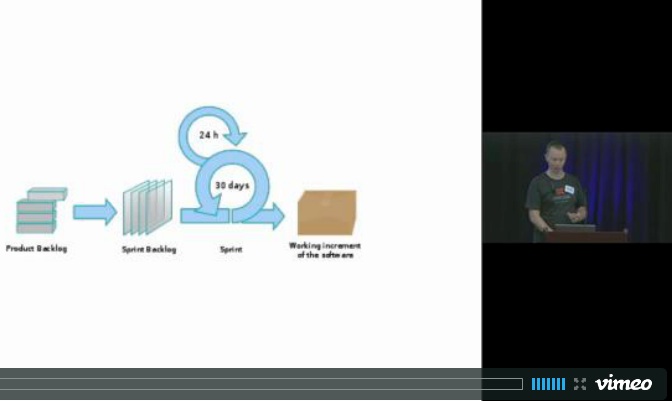

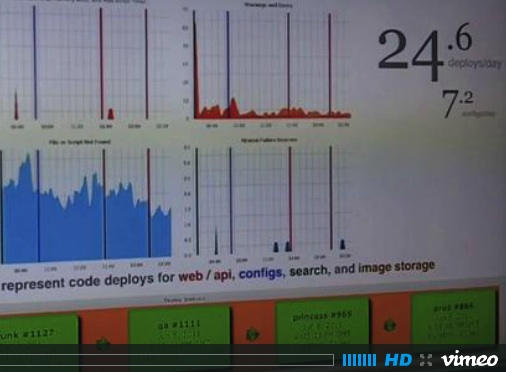

As the old problem of a lack of tooling fades into the distance, the new problem of tool integration is becoming more apparent. Deployment tools, configuration management tools, build tools, repository tools, monitoring tools: by design, most of the popular modern tools in our space are point solutions.

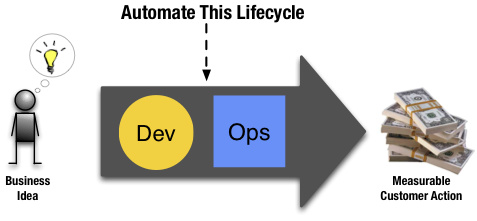

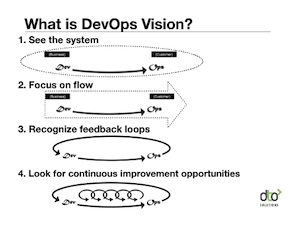

But DevOps problems are, by definition, fundamentally lifecycle problems. Getting from business idea to running features in a customer-facing environment requires coordinating actions, artifacts, and knowledge across a variety of those point solution tools. If you are going to break down the problematic silos and get through that lifecycle as rapidly and reliably as possible, you will need a way to integrate those point solution tools.

The classic approach was for a single vendor to sell you a pre-integrated suite of tools. Today, these monolithic solutions have been largely rejected by the DevOps community in favor of a collection of open source tools that can be swapped out as requirements change. Unfortunately, this also means that the burden of integration has fallen on individual users. Even with the scriptable and API-driven nature of these modern open source tools, this isn’t a trivial task. Try as the industry might to standardize, every organization has different requirements and makes different technology decisions, which makes a one-size-fits-all implementation a practical impossibility (and is also why the classic monolithic tool approach achieved, on average, mixed results at best).
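To make that integration burden concrete, here is a minimal Python sketch of one common way to keep point solutions swappable: the platform codes against a small interface, and each concrete tool sits behind an adapter. `DeploymentTool`, `ScriptDeployer`, `AgentDeployer` and `Pipeline` are hypothetical names chosen for illustration; they are not part of any real product or of DTO's Service Delivery Platform.

```python
from abc import ABC, abstractmethod


class DeploymentTool(ABC):
    """Hypothetical common interface the platform codes against."""

    @abstractmethod
    def deploy(self, package: str, environment: str) -> str:
        """Deploy a package to an environment and return a status message."""


class ScriptDeployer(DeploymentTool):
    """Hypothetical adapter wrapping one concrete deployment tool."""

    def deploy(self, package: str, environment: str) -> str:
        return f"script: deployed {package} to {environment}"


class AgentDeployer(DeploymentTool):
    """Hypothetical adapter wrapping a different concrete deployment tool."""

    def deploy(self, package: str, environment: str) -> str:
        return f"agent: deployed {package} to {environment}"


class Pipeline:
    """Depends only on the interface, so the tool behind it can be swapped."""

    def __init__(self, deployer: DeploymentTool) -> None:
        self.deployer = deployer

    def release(self, package: str, environment: str) -> str:
        return self.deployer.deploy(package, environment)


pipeline = Pipeline(ScriptDeployer())
print(pipeline.release("app-1.0.tar.gz", "qa"))  # script: deployed app-1.0.tar.gz to qa
pipeline.deployer = AgentDeployer()  # swap tools without touching Pipeline
print(pipeline.release("app-1.0.tar.gz", "qa"))  # agent: deployed app-1.0.tar.gz to qa
```

The design choice here is that the integration code, not each tool, owns the contract; replacing a tool means writing one new adapter rather than rewiring the whole toolchain.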

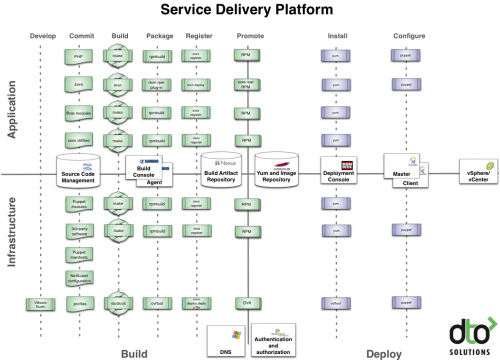

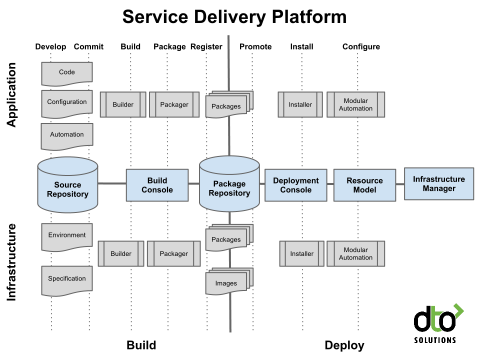

DTO Solutions has made a name for itself by helping its clients sort out requirements and build toolchains that integrate open source (and closed source) tools to automate the full Development to Operations lifecycle. Through that work, a series of design patterns and best practices have proven useful and repeatable across companies and environments of many sizes and types. Over time, these design patterns and best practices have been formalized into what DTO calls a “Service Delivery Platform”.

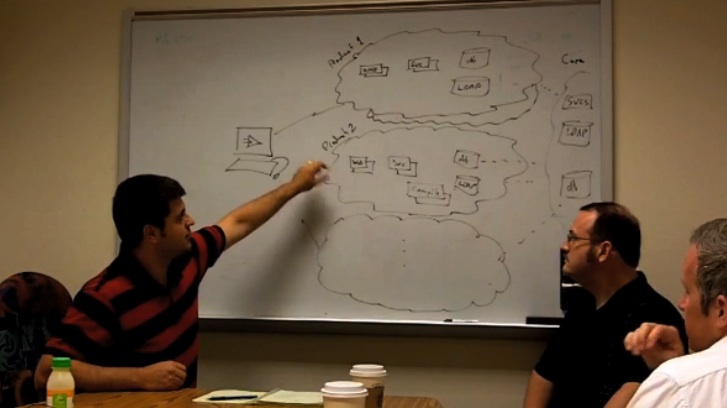

I recently sat down with my colleague at DTO Solutions, Anthony Shortland, to have him walk me through the Service Delivery Platform concept.

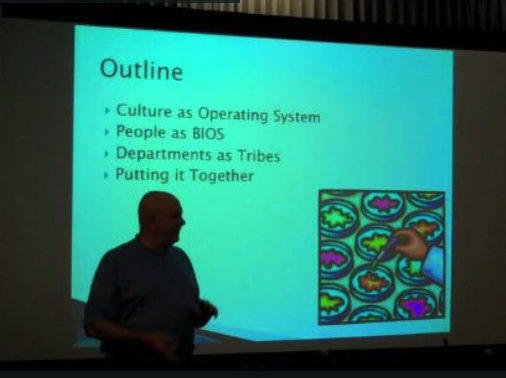

In this video, Anthony covers:

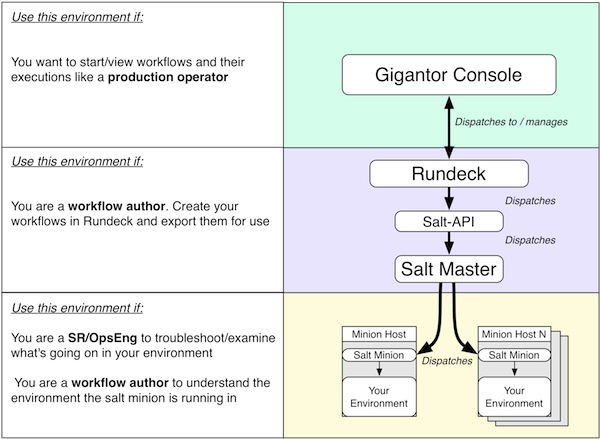

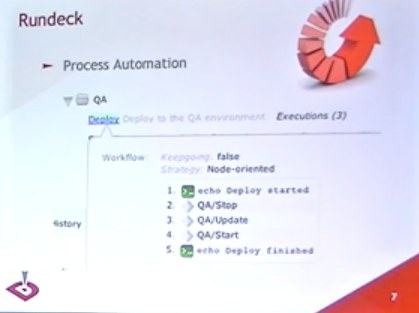

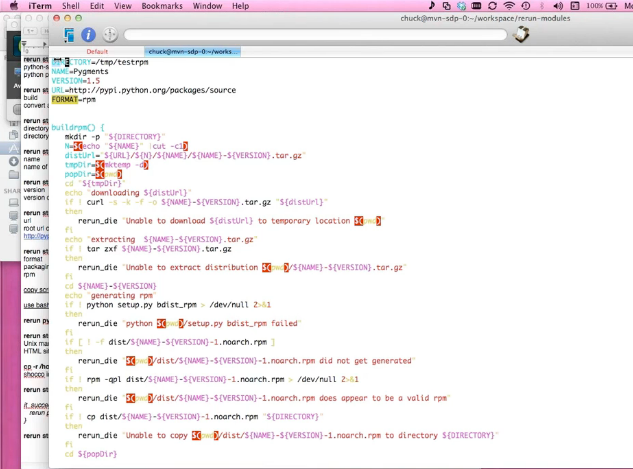

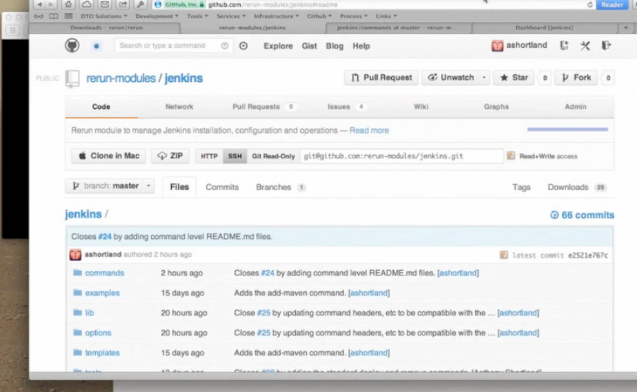

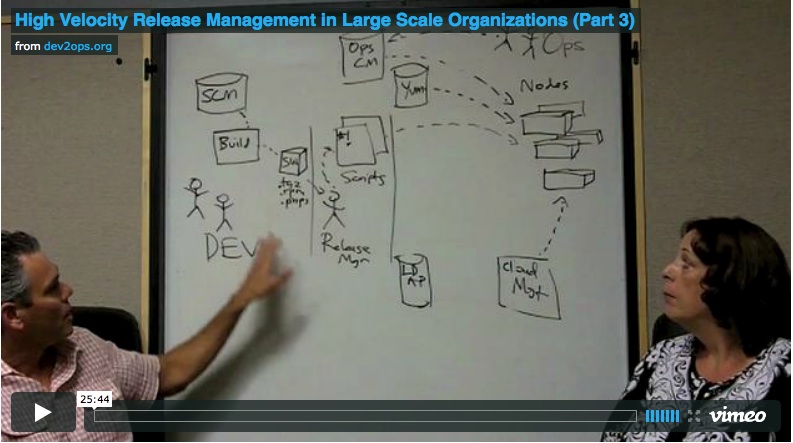

- The “quadrant” approach to thinking about the problem

- The elements of the service delivery platform

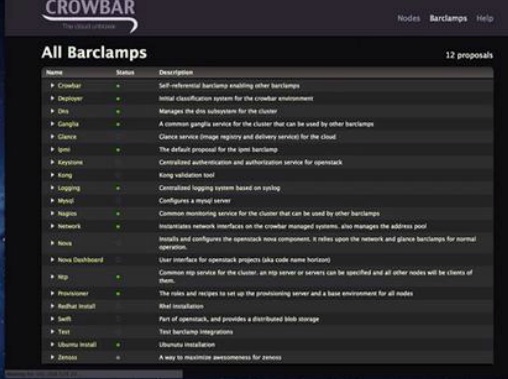

- The roles of various tools in the service delivery platform (with examples)

- The importance of integrating both infrastructure provisioning and application deployment (especially in Cloud environments)

- The standardized lifecycle for both infrastructure and applications
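As a rough illustration of the last two points, the following Python sketch drives an infrastructure artifact and an application artifact through the same lifecycle in one run, advancing the infrastructure first so an application is never deployed onto an environment that has not been provisioned. The stage names in `LIFECYCLE` and the `Artifact` and `release` names are assumptions for illustration, not taken from Anthony's diagram.

```python
from dataclasses import dataclass, field

# Hypothetical lifecycle stages shared by infrastructure and application artifacts.
LIFECYCLE = ("build", "package", "register", "deploy")


@dataclass
class Artifact:
    name: str
    kind: str  # "infrastructure" or "application"
    completed: list[str] = field(default_factory=list)

    def advance(self) -> str:
        """Run the next lifecycle stage and return its name."""
        stage = LIFECYCLE[len(self.completed)]
        self.completed.append(stage)
        return stage


def release(infra: Artifact, app: Artifact) -> list[str]:
    """Drive both artifacts through the same lifecycle, one stage at a time."""
    log = []
    for _ in LIFECYCLE:
        # Infrastructure advances before the application at every stage,
        # so provisioning always precedes application deployment.
        for artifact in (infra, app):
            log.append(f"{artifact.kind}:{artifact.name}:{artifact.advance()}")
    return log


log = release(Artifact("web-tier", "infrastructure"), Artifact("shop", "application"))
print(log[-2:])  # ['infrastructure:web-tier:deploy', 'application:shop:deploy']
```

Using one lifecycle definition for both kinds of artifact is what lets a single orchestration run treat provisioning and deployment as steps of the same release rather than as separate hand-offs.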

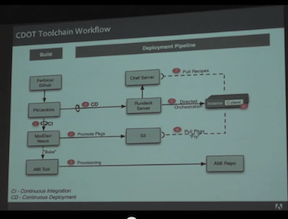

Below the video is a larger version of the generic diagram Anthony is explaining. Below that is an example of a recent implementation of the design (along with the tool and process choices for that specific project).

This is an excellent model. However, what is missing is a clear indication of where the testing is done. I presume that each phase ends with some kind of test that verifies that defects aren’t passed to the next step: pre-submit tests gate Commit; unit tests gate Build; package verification gates Packaging, etc.

But where does User Acceptance Testing (UAT) fit in? Where does the testing for performance regressions fit in? I would do them after Register (because they should be done on the package) but before Promote (because it shouldn’t be promoted if UAT fails).
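The gating the commenter proposes can be sketched in Python as an ordered list of stages, each with a check that must pass before the pipeline continues, with UAT and performance regression tests placed after Register and before Promote. The gate functions are hypothetical stubs standing in for real test suites; only the stage ordering comes from the comment.

```python
from typing import Callable, Optional

# A gate takes the package version under test and reports pass/fail.
Gate = Callable[[str], bool]


def passes(_version: str) -> bool:
    """Stub gate that always passes (stands in for a real test suite)."""
    return True


def make_stages(uat_passes: bool) -> list[tuple[str, Gate]]:
    """Stage order proposed in the comment: UAT and performance sit between register and promote."""
    return [
        ("commit", passes),                    # pre-submit tests
        ("build", passes),                     # unit tests
        ("package", passes),                   # package verification
        ("register", passes),
        ("uat", lambda _version: uat_passes),  # user acceptance tests on the registered package
        ("performance", passes),               # performance regression tests
        ("promote", passes),
    ]


def run_pipeline(version: str, stages: list[tuple[str, Gate]]) -> tuple[list[str], Optional[str]]:
    """Run stages in order and stop at the first failing gate.

    Returns the stages that passed and the name of the failing stage (None if all passed).
    """
    passed: list[str] = []
    for name, gate in stages:
        if not gate(version):
            return passed, name
        passed.append(name)
    return passed, None


passed, failed = run_pipeline("1.2.0", make_stages(uat_passes=False))
print(passed, failed)  # ['commit', 'build', 'package', 'register'] uat
```

Because the tests run against the registered package, a UAT failure stops the run before Promote, which matches the ordering argued for above.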

What do you recommend?