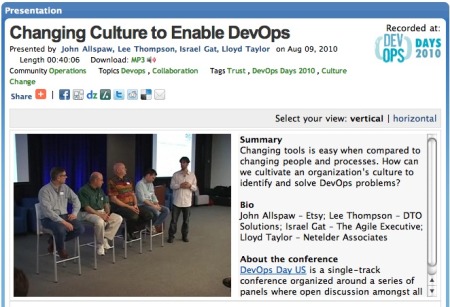

I recently caught up with Lee Thompson to discuss a variety of Dev to Ops topics including deployment, testing, and the conflict between dev and ops.

Lee recently left E*TRADE Financial, where he was VP & Chief Technologist. Lee’s 13 years at E*TRADE saw two major boom-and-bust cycles and a dramatic expansion in E*TRADE’s business.

Damon:

You’ve had large-scale ops and dev roles… what lessons have you learned that required the perspective of both roles?

Lee:

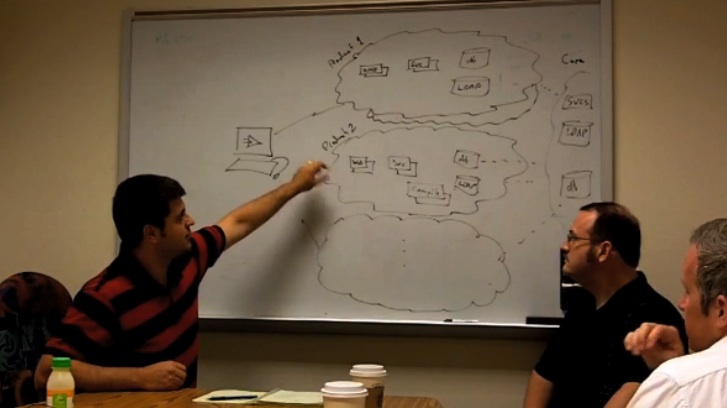

I was heavy into ops during the equity bubble of 1998 to 2000, and during that time we scaled E*TRADE from 40,000 trades a day to 350,000 trades a day. After going through that experience, all my software designs changed. Operability and deployability became major concerns for any kind of infrastructure I was designing. Your development and architecture staff have to hand the application off to your production administrators so the architects can get some sleep. You don’t want your developers involved in running the site. You want them building the next business function for the company. The only way that is going to happen is to have the non-functional requirements (deployability, scalability, operability) already built into your design. So that experience taught me the importance of non-functional requirements in the design process.

Damon:

You use the phrase “wall of confusion” a lot… can you explain the nature of the dev and ops conflict?

Lee:

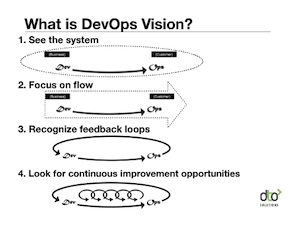

When dealing with a large distributed compute infrastructure, you are going to have applications that are difficult to run. The operations and systems engineering staff who are trying to keep the business functions running are going to say “oh, these developers don’t get it”. And then back over on the developer side, they are going to say “oh, these ops guys don’t get it”. It’s just a totally different mindset. The developers are all about changing things very quickly, and the ops team is all about stability and reliability. One company, but two very different mindsets. Both want the company to succeed; they just see different sides of the same coin. Change and stability are both essential to a company’s success.

Damon:

How can we break down the wall of confusion and resolve the dev and ops conflicts?

Lee:

The first step is being clear about non-functional requirements and avoiding what I call “peek-a-boo” requirements.

Here’s a common “peek-a-boo” scenario:

Development (glowing with pride in the business functions they’ve produced): “Here’s the application”

Operations: “Hey, this doesn’t work”

Development: “What do you mean? It works just fine”

Operations: “Well it doesn’t run with our deployment stack”

Development: “What deployment stack?”

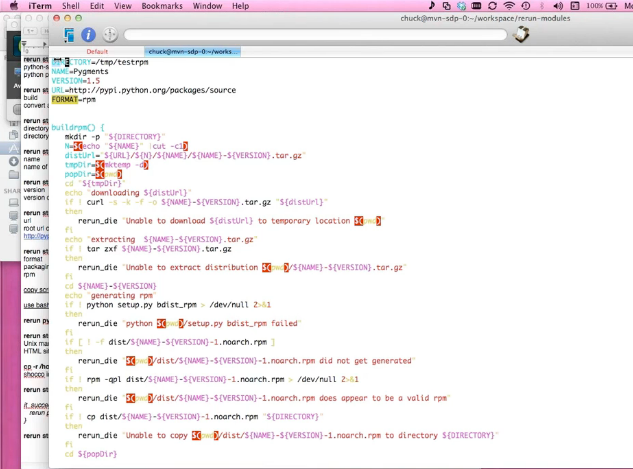

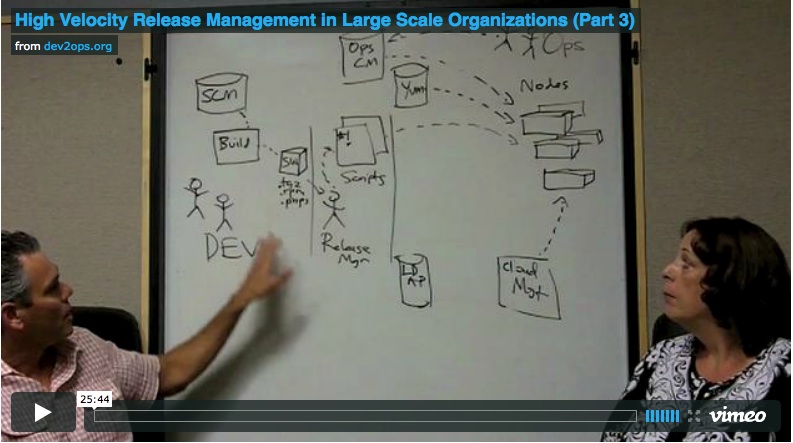

Operations: “The stuff we use to push all of the production infrastructure”

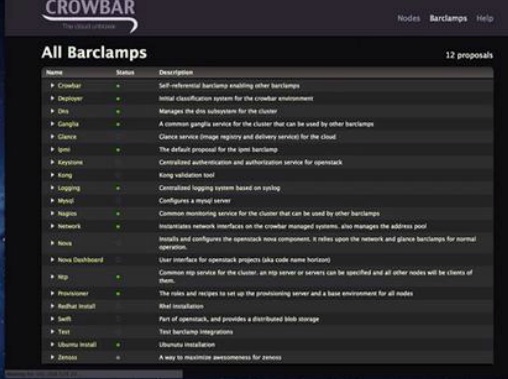

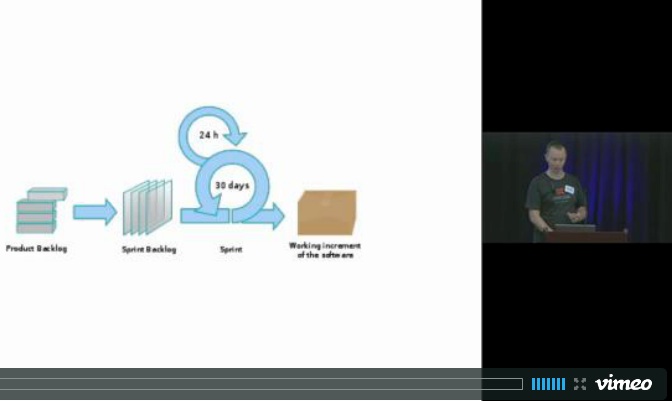

The non-functional requirements become late-cycle “peek-a-boo” requirements when they aren’t addressed early in development. Late-cycle requirements violate continuous integration and agile development principles. The production tooling and requirements have to be accounted for in the development environment, but most enterprises don’t do that. Since the deployment requirements aren’t covered in dev, the operations staff receiving the application have to innovate out of necessity. They end up writing a number of tools that over time become bigger and bigger and more of a problem, which Alex described last year in the Stone Axe post. Deployability is a business requirement, and it needs to be accounted for in the development environment just like any other business requirement.

Damon:

Deployment seems to be a topic of discussion that is increasing in popularity… why is that?

Lee:

Deployability and the other non-functional requirements have always been there; they were just often overlooked. You just made do. But a few things have happened.

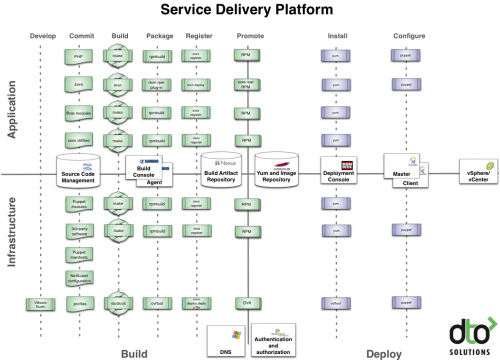

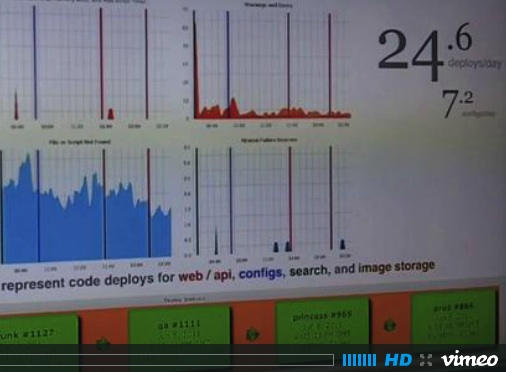

1. Complexity and commodity computing, in both hardware and software, have meant that your deployment is getting to the point where automation is mandatory. When I started at E*TRADE there were 3 Solaris boxes. When I left, the number of servers was orders and orders of magnitude larger (the actual number is proprietary). Since the operations staff can’t afford to log into every box, they end up writing tools because the developers didn’t give them any.

2. Composite applications, where applications are composed of other applications, mean that every application has to be deployed and scaled independently. Versioning further complicates matters. Before the Internet, in the PC and mainframe industries, you were used to delivering and maintaining multiple versions of software simultaneously. In the early days of the Internet, a lot of that went away and you only had two versions: the one deployed and the one about to be deployed. Now, with the componentization of services and the mixing and matching of components, you’ll typically find several versions of a piece of infrastructure facing different business functions. So you might be running three or four independently deployed and managed versions of the same application component within your firewall.

3. Cloud computing takes both complexity and the need for deployability up another notch. Now you are looking to move a portion of your infrastructure out onto the Internet. Almost no one starting up a company right now is talking about building a datacenter; they are all talking about pushing to the cloud. So deployability is very much at the forefront of their thinking about how to deliver their business functions. And the cloud story is only beginning. For example, what happens when you get a new generation of requirements, like the ability to automate failover between cloud vendors?

Damon:

Testing is one of those things that everyone knows is good, but seems to rarely get adequately funded or properly executed. Why is that?

Lee:

Well, like many things it’s often simply a poor understanding of what goes into doing it right and an oversimplification of what the business value really is.

Just like deployment infrastructure, proper testing infrastructure is a distributed application in and of itself. You have to coordinate the provisioning of a mocked-up environment that mimics your production conditions and then boot up a distributed application that actually runs the tests. The level of thought and effort that has to go into doing that properly can’t be overlooked. Well, not if you are serious about delivering on quality of service.

While integration testing should be a very important piece of your infrastructure, the importance of antagonistic testing also can’t be overlooked. For example, the CEO is going to want to know what happens when you double the load on your business. The only way to really know that is to have a good facsimile of your business application mocked up and those exact scenarios tested. That is a large-scale application in and of itself and takes investment.

Beyond service quality, there is business value in proper testing infrastructure that is often overlooked. When you start to build up a large quantity of high-fidelity tests, those tests actually represent knowledge about your business. Knowledge is always an asset to your business. It’s pretty clear that businesses that know a lot about themselves tend to do well, and those that lack that knowledge tend not to be very durable.

Damon:

The culture of any large company is going to be restrictive. Large financial institutions are, by their very nature, going to be more restrictive. Coming out of that culture, what are you most excited to do?

Lee:

Punditry, blogging, and using social media, to start! You really can’t do that from behind the firewall in the FI world. There are legitimate reasons for the restrictions, and I understand that. Because you have to contend with a lot of regulatory concerns, you just aren’t going to see a lot of financial institution technologists ranting online about what is going on behind the firewall. So I’m excited about becoming a producer of social media content rather than just a consumer.

I also find consulting exciting. It’s been fun getting out there and seeing a variety of companies and how similar their problems are to each other and to what I worked on at E*TRADE. It reminds me how advanced E*TRADE really is and what we had to contend with. I enjoy applying the lessons I’ve learned over my career to helping other companies avoid pitfalls and helping them position their IT organization for success.