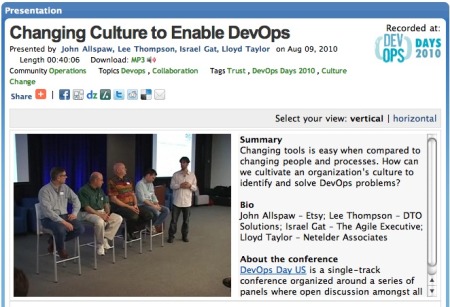

Q&A: Erik Troan on the role of version control in Operations

Damon Edwards

“Just logging into a box and changing the software stack or its configuration is an idea whose time has passed.”

-Erik Troan

I recently spoke with Erik Troan about a topic he’s passionate about: bringing version control concepts and tools to IT Operations. Below is the lightly edited transcript of the highlights of the conversation.

Erik Troan is the Founder and CTO of rPath. Erik previously served in various roles at Red Hat including Vice President of Product Engineering, Senior Director of Marketing, and chief developer for Red Hat Software. You might also know him as one of the two original engineers who wrote RPM.

Damon:

You were one of the original engineers who wrote RPM. Since your RPM days, how has your thinking about the role of version control in operations evolved?

Erik:

RPM was originally written to let two guys in an apartment build a Linux distribution without losing their minds. We really focused on the build side. Pristine source and patches were very important to us. The idea of sets of packages was not important to us. Probably the biggest shift came as I started working with large companies and large deployments, originally at Red Hat and now with rPath: package management wasn’t about moving around individual packages, it was about installing a consistent set of software on machines and making sure those machines stayed consistent. So that’s where this idea of strong system version control came from.

It’s interesting to have a version of a package but it’s more interesting to be able to have a version of a deployed system. One helps the developers at a Linux distribution shop and the other helps systems administrators out in the wild. When you look at how RPM dependencies work, dependencies are solved against whatever packages happen to be newest (which can change tomorrow), while tools in the version control universe solve dependencies against a versioned repository with richer and finer-grained dependency discovery and resolution. When you look at version control from that perspective, you get a sys admin tool rather than a developer tool.
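To make the contrast concrete, here is a minimal editorial sketch (not RPM's or rPath's actual resolver). The repository snapshots, package names, and version strings are made up for illustration. It shows how resolving against "whatever is newest" can give a different answer tomorrow, while resolving against a fixed repository version stays repeatable.

```python
# Hypothetical repository history: snapshot id -> {package name: version}.
# All names and versions here are illustrative assumptions.
REPO_SNAPSHOTS = {
    1: {"apache": "2.2.14", "openssl": "0.9.8k"},
    2: {"apache": "2.2.15", "openssl": "0.9.8l"},
}


def resolve_newest(names, snapshots):
    """Resolve against the newest snapshot at call time.

    The answer changes whenever a new snapshot is published.
    """
    latest = snapshots[max(snapshots)]
    return {name: latest[name] for name in names}


def resolve_pinned(names, snapshots, snapshot_id):
    """Resolve against one fixed snapshot, so the answer is repeatable."""
    pinned = snapshots[snapshot_id]
    return {name: pinned[name] for name in names}
```

Two machines built a week apart with `resolve_newest` may end up with different software; two machines built with `resolve_pinned` and the same snapshot id get the same set.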

Version control for system definitions is one piece of the scheme. You still have to have configuration, orchestration, and the processes around them. But version control is a great underpinning to all of that because your orchestration tools and your configuration tools get smarter as you have consistent sets of software on client boxes and a consistent way to maintain those over time. It’s the difference between Puppet making sure Apache is installed and it making sure the right version of Apache is installed along with the system components that Apache has been validated against. You put Puppet policies into a version control system; shouldn’t configuration versioning be matched by a version control system for provisioning software?

Damon:

From a modern software development point of view, keeping everything in version control is almost a foregone conclusion. However, in IT Operations, version control systems are fairly new and still underused. Why do you think that is?

Erik:

I think that version control is a response to complexity. If you go back 30 years, who would use version control for software development? The guys who built UNIX used it. People at IBM used it a little bit. But most people had a directory full of source code and they just lived with it. In the 80’s, source code became complicated. Projects became bigger. All of a sudden you had a whole bunch of fingers in the pot and things were changing all the time. You needed the ability to understand what was going on. To track what was going on. To do bisection when something broke: when did it break? Which patch broke it? All of this really just arose out of the complexity of source code development.

I think you are seeing the same thing happen in the IT arena. Again, if you go back to the 80’s, minicomputers had just come out and people only had three computers to maintain. That’s just not that hard. Then the 90’s come along and people had 25 or 30 Sun machines to maintain. In today’s world, people have tens of thousands of machines to maintain. I don’t talk to very many companies now who have fewer than 5,000 machines. They have them in cloud environments, datacenters, virtual environments — just the sheer complexity and scale of that is making version control an important part of the process. It lets you ask questions like “how is this machine configured today?”, “how is it going to be tomorrow?”, “how is it different than it was yesterday?”. It adds that time dimension to systems management so you understand where you are, how you got there, and where you are going next. That’s really what version control is all about. And then of course the ability to go backwards — if something breaks, how can I undo that and get back to something that worked?

Just logging into a box and changing the software stack or its configuration is an idea whose time has passed.

Damon:

What is the value of using version control in an operational environment from an individual’s point of view? What’s the value from an organizational point of view?

Erik:

One of the things I should emphasize is that when we talk about version control we are really talking about a version control system, the whole system for how you do version control. You can think about it like CVS or Subversion. As an individual systems administrator, I can log into a box and see what version of what software is on the box, which you can do with something like RPM. But I should also be able to see what version of the entire manifest is on there. So instead of just versioning individual packages, you’ve versioned a set of packages. If box #1 and box #2 are running the same manifest version, then I know that the boxes are probably the same. Having the version numbers of 1,000 RPMs is information overload; reducing that to a single version number for the complete system manifest makes things comprehensible.
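One way to picture a single "manifest version" is as an identifier derived from the whole package set. The sketch below is an editorial illustration under that assumption; it is not how rPath or RPM computes anything, and the function name and the choice of a truncated SHA-256 digest are hypothetical.

```python
import hashlib


def manifest_version(packages):
    """Return a short identifier for a whole set of packages.

    `packages` maps package name -> version string. Entries are sorted
    first so the identifier does not depend on the order in which the
    packages were listed.
    """
    lines = sorted(f"{name}={version}" for name, version in packages.items())
    digest = hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()
    return digest[:12]
```

If two boxes report the same identifier, their package sets match; comparing one short string replaces comparing a thousand individual package versions.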

But you also want to do things like tell how a system is different from the version that is supposed to be on there. So you can go to the box and say show me how you are different from the manifest that is on this box? Or, there’s a new manifest — show me how this system differs from that new manifest. The other thing you can do is say that this box is broken — it was working yesterday but it is not working today — let me look to my version history and know exactly what has changed. What packages have changed? What configuration files have changed? Who made the changes? This box needs to be online right now so let me undo those changes — go back in time and put the box back to how it was. So this idea of being able to examine forward, move backwards, and having all of your configurations and everything else under a version control system is really a strong tool in a system admin’s quiver.
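The "show me how you are different from the manifest" question can be sketched as a comparison of two package maps. This is an editorial illustration with made-up package names and versions, not the behavior of any particular tool.

```python
def diff_against_manifest(installed, manifest):
    """Compare what is on a box with what its manifest says should be there.

    Both arguments map package name -> version string.
    """
    unexpected = {n: v for n, v in installed.items() if n not in manifest}
    missing = {n: v for n, v in manifest.items() if n not in installed}
    changed = {
        n: (manifest[n], installed[n])  # (expected, actual)
        for n in installed
        if n in manifest and installed[n] != manifest[n]
    }
    return {"unexpected": unexpected, "missing": missing, "changed": changed}


# Illustrative data only.
manifest = {"apache": "2.2.14", "openssl": "0.9.8k", "bash": "4.0"}
installed = {"apache": "2.2.15", "openssl": "0.9.8k", "telnet": "0.17"}
drift = diff_against_manifest(installed, manifest)
```

The same function covers the other questions in the answer above: pass yesterday's manifest to see what changed since the box last worked, or pass a new manifest to preview an upgrade. Undoing a change then amounts to reapplying the earlier manifest.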

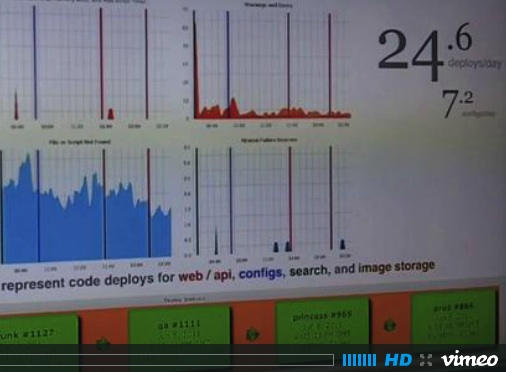

On the enterprise or departmental level, this really becomes valuable by enabling automation. The Visible Ops handbook certainly talks a lot about the idea of a Definitive Software Library, which is a versioned repository where all of your software comes from. The reason it does that is for all of the software artifacts that are going on machines in your infrastructure you want to know where they came from and how they got there. You want to be able to go back and say “I need to know everywhere this version of SSL is running because there is a security hole in the library”. Version control systems let me ask that kind of question. For automation, version control becomes critical. If you are deploying 1,000 machines you’re not deploying 1,000 individual machines. You’re deploying 1,000 cookie cutter machines. If they are all the same, then that definition ought to be in a version control system so you can view that definition over time and understand how it has changed, from yesterday to today to tomorrow. Not to mention that you can deploy another 1,000 next week that are exact replicas of the last 1,000.
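The "everywhere this version of SSL is running" question becomes a simple lookup once every machine's software set is recorded centrally. The sketch below is an editorial illustration; the inventory structure, host names, and versions are assumptions made for the example.

```python
def hosts_running(inventory, package, version):
    """Return the hosts whose recorded manifest has `package` at `version`.

    `inventory` maps host name -> {package name: version string}.
    """
    return sorted(
        host for host, packages in inventory.items()
        if packages.get(package) == version
    )


# Illustrative inventory only.
inventory = {
    "web01": {"openssl": "0.9.8k", "apache": "2.2.14"},
    "web02": {"openssl": "0.9.8l", "apache": "2.2.14"},
    "db01": {"openssl": "0.9.8k"},
}
affected = hosts_running(inventory, "openssl", "0.9.8k")
```

With hand-assembled boxes there is no such inventory to query, so the only way to answer is to log into every machine.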

Version control systems also bring structure and predictability to the release lifecycle. Systems can be easily and consistently recreated as they move through the development, test, and production lifecycle, which eliminates the risk of configuration drift as they progress through those stages.

Damon:

Like all ideas in technology, I’m sure there are those who will come out on the other side of this issue. What are some of the arguments you hear against the idea of advanced usage of version control in operations or objections as to why it won’t work in their specific situation?

Erik:

There are really two arguments. One of which is “that’s not the way we do it now… what we do now is OK… it’s too hard to change and we just don’t want to do it”. I get that a lot, just that inertia: people don’t want to change their systems. The other argument you get is “every machine we have is different so we can’t make our machines the same… every artifact is different so why would you version control them?” My answer to that is if you have 1,000 machines in your business and they are all different then you are doing something extremely wrong. Your version control system can help you understand why they are different. It can represent why they are different. But it can also help you eliminate those differences.

And then there is also just the prioritization: “how much of a priority is this?” and “do we have other things we need to solve today?”. It’s unusual to talk to anyone who says that using version control to deploy systems is a bad idea. It’s just finding the people who feel like it’s a problem that they have to solve right now because they have a compliance issue, or a repeatability issue, or an automation issue. In IT, it’s the problems that get fixed. You don’t do a lot of things without a problem to solve.

Damon:

How would you go to a company’s executive-level management and explain that they need to be dedicating resources to bringing strong version control practices to their operations?

Erik:

A lot of this comes down to cost control or reduction. Everywhere I look the number of servers under management is growing. Virtualization is putting four managed instances on each physical box. Cloud technologies are provisioning new instances in thirty seconds; each of those needs to be managed. Most shops try to hold a constant ratio of machines per sysadmin. If you let the number of instances grow by five times thanks to virtualization and cloud, are you planning on growing your IT team by five times? If not, you had better automate everything you can see. Complete system version control makes system administrators more efficient.

There are two external drivers as well. One is risk. When systems are being hand assembled and configured (you’ll see a Puppet, a Chef, or a cfengine used in a pretty small percentage of enterprises), how do you know they are going to work tomorrow? If you lose an employee, is the knowledge that person had about how a system is put together, and why it was put together, captured anywhere? Or is it just in their head? Version control systems capture that information.

Compliance, such as security compliance standards, is another great motivator. You can have external compliance with a standard like PCI or Sarbanes-Oxley. Can you audit your systems to know that they are right? If all of your systems are hand assembled then you don’t even have a definition of what correct looks like. So what are you measuring against if people are just allowed to log into a box and change things willy-nilly? For example, with PCI compliance (the standard you have to adhere to if you are going to hold onto credit card numbers for subscription billing), you are going to have auditors come in and ask “Where does this package come from?”, “Who put it there?”, “Why is it there?”, “How do you get updates on there?”, “How do you know which boxes need updates?”. Those are the kinds of questions that could be answered by a good version control system. But if you have hand assembled boxes then they are pretty much impossible to answer.