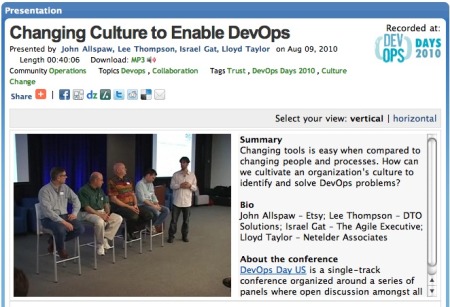

When you think about the multitude of companies that conduct business via software as a service, you’d expect to find a diverse range of underlying business models: CRM, e-tailing, social networking, advertising, etc. But if you’re not in the trenches with the dev2ops folks, you might be amazed at how diversely these companies manage their application environments and the variety of processes and mechanisms they employ.

A lot of research and mature best practice have developed in areas like security, availability, and scale. Because these areas are more established and their problems better understood, one can rely on proven methods and exploit commodity solutions. There is much less agreement about how IT should technically manage software-as-a-service environments, especially complex, multi-layer, multi-site online software systems that change often.

Sure, there are established methods for scheduling and planning application change, as evidenced by the various trouble ticket systems, bug trackers, and issue management tools out there. There are also emerging IT service management practices and paradigms from IT governance initiatives, such as ITIL. These tools and methodologies mostly focus on “people management” and are essential for coordinating human activity and workflow. What is surprising is the lack of consensus on “how” changes are technically performed and “what” is used to perform them.

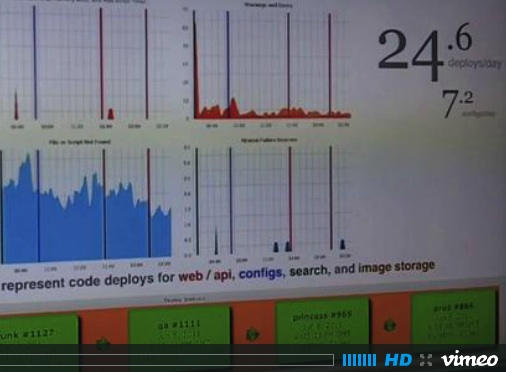

After all, the dev2ops folks in the trenches know it boils down to a series of technical steps: people and/or scripted tools distribute various files, set environment configurations, import data, alter stored procedures, synchronize content repositories, check and/or modify permissions, stop and start processes, and so on. It is at this level of change implementation that an astounding variety of tools and procedures can be witnessed.
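
To make that "series of technical steps" concrete, here is a minimal Python sketch of one way to describe a change as an ordered list of steps. It is only an illustration, not a description of any particular team's tooling: the names `Step` and `run_change` and the placeholder actions are all hypothetical, and a real step would copy files, run SQL, or restart services instead of appending to a list.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One technical step of a change (hypothetical structure)."""
    name: str
    action: Callable[[], None]


def run_change(steps: List[Step], dry_run: bool = True) -> List[str]:
    """Visit the steps in order and return their names.

    With dry_run=True the actions are skipped, so the plan can be
    reviewed before anything is touched.
    """
    visited = []
    for step in steps:
        if not dry_run:
            step.action()
        visited.append(step.name)
    return visited


# Placeholder actions: each one only records that it ran.
performed: List[str] = []
steps = [
    Step("stop processes", lambda: performed.append("stop")),
    Step("distribute files", lambda: performed.append("files")),
    Step("set environment configuration", lambda: performed.append("config")),
    Step("start processes", lambda: performed.append("start")),
]

# Review the plan without executing any step.
print(run_change(steps, dry_run=True))
```

Whether the steps are written down like this, kept in a shell script, or performed by hand from a wiki page is exactly the kind of difference between teams that this post is about.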

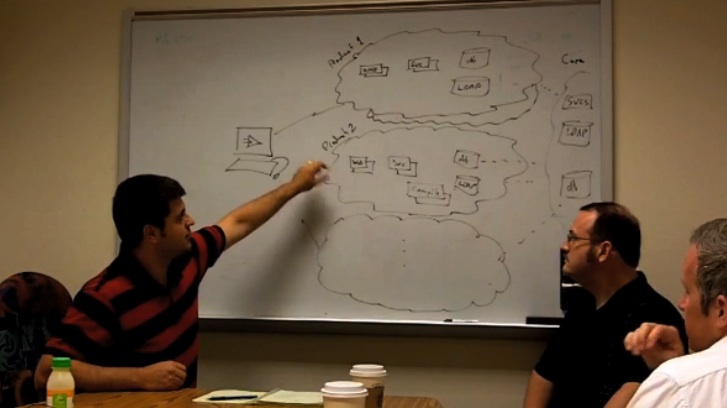

How change is implemented, and with what, can even differ between groups within the same company. Developers may use developer tools to update running applications in test environments, while operations uses a completely separate set of tools and procedures to maintain production environments. Further, businesses that operate more than one application might see this range of practices multiplied. The worst case is one where there is a different process and set of tools for each combination of application, team, and environment.

Why do we see such a variety of approaches used to change application environments? Does each group need to invent its own procedures and toolsets? Are environments and applications so unique that there must be a customized and optimized solution for each case? Can one establish some basic approaches and paradigms that can be shared across organizational teams, or even across businesses?

To help answer these questions and better understand why folks choose from such a diverse array of approaches and tools, we here at dev2ops will conduct a series of polls. But we need your help in the form of participation. We’ll come up with the first round of polls and rely on you to respond and post about your poll selections. You can also send survey ideas to polls@dev2ops.org, and if that ground hasn’t already been covered, we’ll post those, too.

During the course of the polling, we’ll look for common patterns and pitfalls, some obvious to some folks and novel to others. In the end, we can see where there is common agreement and compare our own current practices against it.

So, look out for the polls and vote!