Nick Carr’s “The Big Switch”… the big dream?

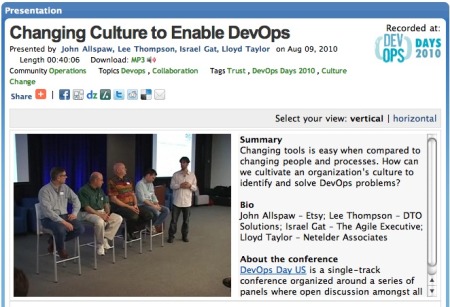

Damon Edwards

Vinnie, The Deal Architect, has an interesting early review of The Big Switch, the latest book from Nick “IT Doesn’t Matter” Carr. The book sounds like an interesting read and I just ordered a copy from Amazon.

Vinnie has a great take on how the reality of utility computing just doesn’t match up with the dream the pundits are selling.

My comment on his blog sums up my $0.02 on the matter:

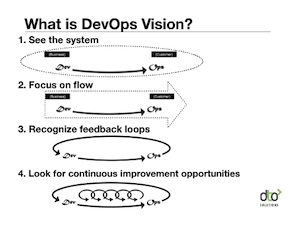

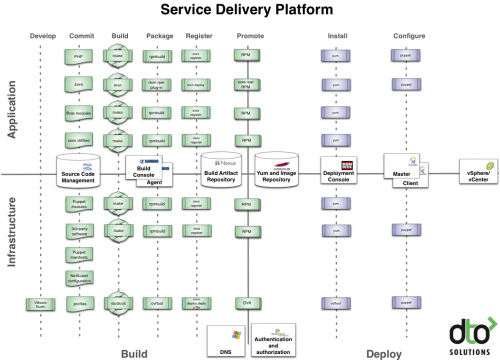

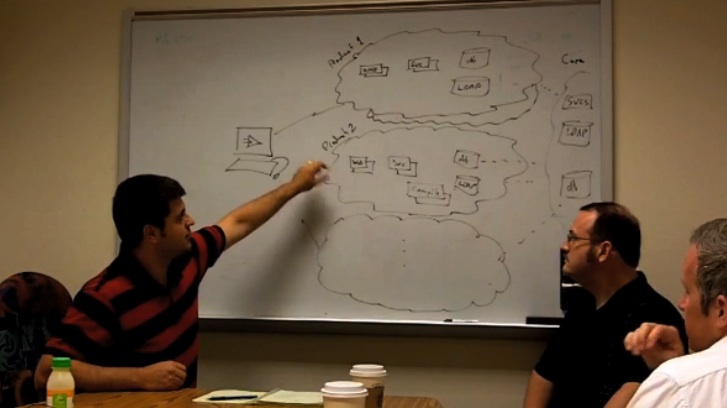

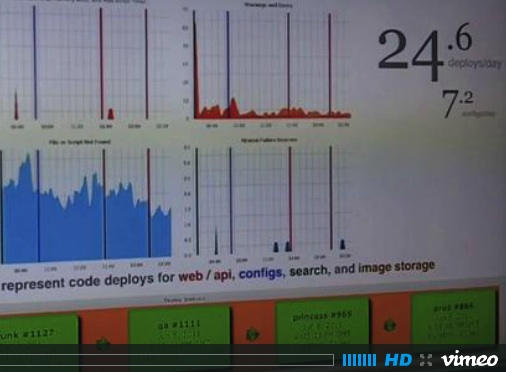

But I would add to your analysis that the root problem isn’t scale. The problem is that the business visions have jumped light years ahead of their internal capability to “deliver”. Simply put, the technical tooling and technical processes are woefully inadequate. Poke around in how the large outsourcers, managed services providers, or even large e-commerce and SaaS providers manage their infrastructure and applications and I think you’ll be shocked at how manual and ad-hoc things really are.

A good metaphor to use is manufacturing. The business minds behind the utility computing push are talking about the equivalent of “mass customization” and “just in time delivery”, while the technology and process model available to deliver those dreams is little more than the master craftsman and apprentice model of the pre-Ford Motor Company days (or maybe an early Ford assembly line, to be fair).

There are some interesting things under the radar in the open source community like ControlTier (plug) and Puppet, but general interest in the problem space seems to be confined to the relatively small pool of engineers who have tried to scale significant operations and know that a better way is out there. Unfortunately, most of the technical fanfare in this area seems to be focused on “sexier” things like faster grid fabrics and hardware vendor wars. In general, automating and optimizing technical operations is a neglected field.

And for the time being, forget about help from the big 4 systems management vendors. Their state of the art is not much more than 15-year-old Desktop/LAN management technology wrapped in a new marketing veneer.

So this is a problem that isn’t going away soon and is a real impediment to all who don’t have high profit margins or large pools of cheap labor to throw at the problem.

You’re right that the existing state of the art with major outsourcers is woefully inadequate compared to Nick’s vision. However, IMHO you’re looking in the wrong place. They make their living off marking up labor, so they won’t willingly destroy that model. They’ll adopt some virtualization, and maybe even an image management system, but they’re not the power producers Nick writes about.

Evidence of the change is available, though. Google has spent billions developing new data centers in towns where staffing more than a dozen sys admins will be impossible. How do they run those data centers? Simple, they’ve developed technology specifically for that purpose, their own infrastructure system. Amazon, likewise, had to build their own infrastructure system, and now they’re trying to offer it to the public as EC2. We at 3tera have built such a system as well, and license it to hosting providers who offer utility services with it.

These three systems offer radically different approaches to solving the issue of delivering computing power as a utility, and that’s the point of this comment. The problems you mention are real, but they are also being addressed, and the resulting technology is in use delivering applications on the internet today.

@Bert:

Yes, there is a better way. Yes, some well-funded players are doing it better, but that wasn’t my point. Isolated versions of a better mousetrap don’t move an industry forward. In fact, if you look at how some of the biggest players out there manage their operations, you are going to find very different and very incompatible tools and processes. They guard “their way” like it’s their secret sauce. Compare this attitude to what you find in the world of auto manufacturing, where common tooling and agreed-upon processes are widespread and the collective shared knowledge and discipline drive the entire industry forward. Parts manufacturers now provide whole plug-in systems, designers now “design for manufacturability”, and contract manufacturers can excel in specialty fields that never existed before. Because ideas like the Toyota Production System have become standard knowledge and practice across the industry, the rising tide has lifted all boats. Meet with a plant manager or supply chain architect from any auto company and they will recite to you the same base of tooling and process knowledge. Try that with any of the big tech players you mentioned.

I’m sure that your company does great work, just like I know ControlTier has been successful making e-commerce and SaaS vendors more efficient and agile. The answers may seem clear to us, but it’s time we worry about the industry as a whole. The ideas we promote are not general knowledge, and the tools we develop are not part of the average developer’s, release engineer’s, or sys admin’s toolbox. Until software operations and lifecycle automation is a respected and well-studied public discipline, we aren’t going to see that change.

As a side note… yes, Google has remote data centers, but along with Yahoo and Microsoft (who are also building remote data centers), they have been hiring folks in the sys admin and operations field at an alarming rate the past couple of years. If you talk to small firms trying to compete for that talent, they’ll tell you that they are finding the pool quite dry. I’m sure Google and company have figured out remote access 😉

Bert, yes, that is the way of the future, but it has penetrated less than 1% of total corporate spend on software and outsourcing. And we have a catch-22: it needs to happen, but the bigger vendors cannot or will not move to the efficient utility model.

I appreciate your point, but it’s important to note that the auto industry didn’t arrive at the state you describe until the later part of the last century. Henry Ford built a vertically integrated company, making tires and windshields and even running hospitals to keep his workforce productive. In his era this was a necessity. It took another 80 years of constant evolution and market pressure to achieve the supply chain you describe.

The supply chain, standards, and acceptance for utility computing will take several years to form as well. Consider networking for a moment. From the creation of Ethernet to the time almost everyone was connected to the internet took roughly 20 years. Along the way numerous standards were written, and a small fraction of those form the basis of the internet today. Numerous startups brought out innovative products, and a few survived to become the supply chain we enjoy today. Cisco connected everyone, Netscape gave us the tools to communicate, and Hotmail, Yahoo and Amazon gave us a reason to get online. Noticeably absent from that chain of events were IBM, DEC, Oracle, SAP and almost every other major IT vendor.

You’re right that today the big vendors have no real utility computing offering. However, numerous companies aren’t waiting. They’re enjoying utility computing today by launching or migrating applications with Amazon’s EC2 and The Gridlayer. Some of these applications are quite large, comprising hundreds of processors – larger than most corporate IT applications. As a result of using utility computing these companies enjoy true competitive advantage. They have no capital outlay for hardware, no fixed expenses, much lower labor overhead, no provisioning delays, plus they garner new capabilities. They can get started with extremely little expense, scale quickly and easily, and speed through QA by making hundreds of copies of applications. These innovators use the new technology because it gives them leverage.

Despite what you may be reading elsewhere, not all of these innovative users are small 😉