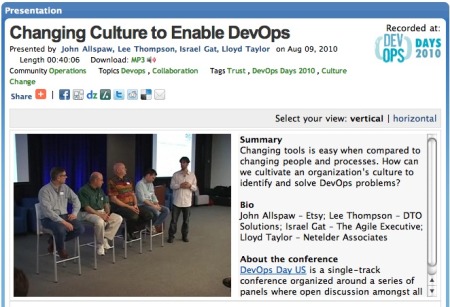

Q&A: Continuous Deployment is a reality at kaChing

Lee Thompson

Update: KaChing is now called Wealthfront! Their excellent engineering blog is now http://eng.wealthfront.com/

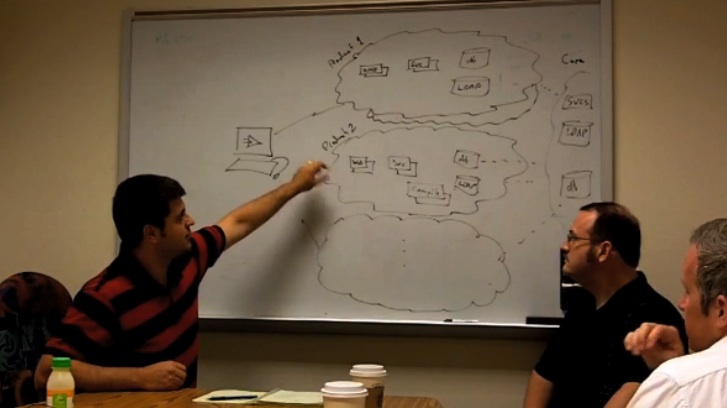

kaChing invited me over to their Palo Alto office last week and I sat down with Pascal-Louis Perez (VP of Engineering & CTO) and Eishay Smith (Director of Engineering) to talk shop.

I learned about continuous deployment, business immune systems, test randomization, external constraint protection, how code can be thought of as “inventory”, and that the ice cream parlor across the street from kaChing has great cherries! Below is a transcript of our chat.

Note: Tomorrow night (May 26), kaChing will be presenting on Continuous Deployment at SDForum’s Software Architecture & Modeling SIG at LinkedIn’s campus!

| Pascal-Louis Perez | Eishay Smith |
| --- | --- |
| VP of Engineering & CTO | Director of Engineering |

Lee:

Eric Ries, possibly Continuous Deployment’s biggest advocate, is a tech advisor for kaChing and he’s done a few startups himself! Eric mentions Continuous Deployment (CD) within a concept called the Lean Startup. It’s possibly the best business reason I’ve heard to do continuous deployment, giving you more iteration capability to get the product right in the customer’s perspective. You’re doing a startup with kaChing and blogged about your CD a few weeks ago. It’s great to be here today to learn a bit more about your CD implementation!

Pascal:

Yeah, and I think that Eric really made it popular at IMVU, which has probably one of the most famous continuous deployment systems: a roughly 20-minute, fully automated commit-to-production cycle. Very early on, Eric and I started spending some time together. He was kaChing’s tech advisor almost from the start. He helped drive the vision of engineering and clearly conveyed why you would care about continuous deployment and why it’s such a natural step from agile planning to agile engineering. I think many people confuse agile planning with agile engineering. Agile engineering is the ability to ensure your code is correct quickly and achieve very quick iteration, like three minutes or five minutes. You commit; you know the system is okay.

Lee:

Before you forget about what you just changed.

Pascal:

Right. Exactly. So continuous deployment in that sense is just one more automation step that builds on top of testing practices, continuous testing, continuous build, very organized operational infrastructure, and then continuous deployment is kind of the cherry on top of the cake, but it requires a lot of methodology at every level.

Lee:

You talked about testing a lot in the blog, that it’s a major, major part of continuous deployment.

Eishay:

Without fast tests, and a lot of them, continuous deployment is very hard to achieve in practice.

Lee:

And that means both unit and integration testing, obviously, I would think?

Eishay:

Yeah. We focused mostly on unit tests. We test almost every part of our infrastructure, so integration tests are also important, but not as much as unit tests.

Lee:

We were talking offline a little bit about the large number of tests and that you randomize the order in which they are run.

Eishay:

We randomize them after every commit, and we have small commits.
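As an editorial illustration of randomizing test order per commit (not kaChing’s actual harness), here is a minimal Python sketch. It assumes the shuffle is seeded with the commit identifier so that a failing order can be replayed; the function name `randomized_order` and that seeding choice are assumptions, not something stated in the interview.

```python
import random


def randomized_order(test_names, commit_id):
    """Return the test names in a pseudo-random order derived from commit_id.

    Assumption for this sketch: seeding with the commit id means the same
    commit always yields the same order, so an order-dependent failure
    can be reproduced later.
    """
    rng = random.Random(commit_id)   # string seeds are deterministic in Python 3
    order = sorted(test_names)       # canonical starting point, independent of input order
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    tests = ["test_prices", "test_orders", "test_accounts", "test_reports"]
    print(randomized_order(tests, "commit-1234"))
```

Running tests in a different order on each commit tends to expose hidden dependencies between tests, such as shared state left behind by an earlier test.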

Pascal:

And we’re trunk stable, so everybody develops on trunk and the software is stable at every point in time.

Lee:

And the unit tests are with the implementation?

Pascal:

Absolutely.

Lee:

And then the integration tests, are those a separate package or application?

Pascal:

No, it’s all together. Some of the integration tests are part of the continuous build, and I think the other integral part to continuous deployment is what Eric Ries calls the immune system, which is basically automated monitoring and automated checking of the quality of the system at all times. One of the things in our continuous deployment system is we release one machine, we let it bake for some time, then we release two more, we let that bake for some time, and then we release four more.
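The one, then two, then four rollout described above can be sketched as follows. This is an editorial illustration in Python under stated assumptions: the helper names (`rollout_batches`, `deploy`) and the callbacks `release`, `is_healthy`, and `bake` are hypothetical stand-ins for whatever the real deployment system and immune system do.

```python
def rollout_batches(hosts, first_batch=1):
    """Split hosts into batches of 1, 2, 4, ... (the last batch may be smaller)."""
    if first_batch < 1:
        raise ValueError("first_batch must be at least 1")
    batches, size, start = [], first_batch, 0
    while start < len(hosts):
        batches.append(hosts[start:start + size])
        start += size
        size *= 2
    return batches


def deploy(hosts, release, is_healthy, bake=lambda: None):
    """Release batch by batch, baking after each one.

    Returns (succeeded, released_hosts). Stops at the first batch that
    contains an unhealthy host after baking, so the caller can roll back.
    """
    released = []
    for batch in rollout_batches(hosts):
        for host in batch:
            release(host)
            released.append(host)
        bake()  # hypothetical wait period while monitoring watches the new hosts
        if not all(is_healthy(host) for host in batch):
            return False, released
    return True, released
```

Doubling the batch size keeps the blast radius small early on, when a bad release is most likely to be caught, while still finishing in a logarithmic number of bake periods.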

Lee:

So the software is compatible with the new version and the old version being co-deployed?

Pascal:

Yeah. Forward/Backward compatibility is a must at every step.

Eishay:

We need to do self-tests of the services. One service starts and has a small self-test. It checks itself. If it fails its own self-test, because it’s not configured properly, it can’t talk with its peers, or for any other reason, then it will roll back.

Lee:

So you have a bunch of assert statements, so that once the software boots it checks the things that are critical for it to run?

Eishay:

Right.

Pascal:

For instance, the portfolio management system starts. Can it get prices for Google, Apple, and IBM? If it can’t, it shouldn’t be out there.
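A startup self-test of this kind might look like the sketch below. It is an editorial illustration, not kaChing’s code: `get_price`, `serve`, and `roll_back` are hypothetical callbacks, and the ticker strings are used only because they match the example in the conversation.

```python
def self_test(get_price, symbols=("GOOG", "AAPL", "IBM")):
    """Return the symbols for which no usable price could be fetched."""
    failures = []
    for symbol in symbols:
        try:
            price = get_price(symbol)
        except Exception:
            failures.append(symbol)      # dependency unreachable or misconfigured
            continue
        if price is None or price <= 0:
            failures.append(symbol)      # got an answer, but not a usable price
    return failures


def start_service(get_price, serve, roll_back):
    """Run the self-test before taking traffic; roll back if anything fails."""
    failures = self_test(get_price)
    if failures:
        roll_back(failures)
        return False
    serve()
    return True
```

The point of the design is that a service which cannot do its most basic job never starts serving, so a bad configuration is caught on the first machine of a rollout.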

Lee:

Yeah. If IBM changed its symbol, which it hasn’t since it’s been public, you’d have to go in there and change that assertion, but you would know that almost immediately.

Pascal:

Yeah. We actually have – digressing a bit into the realm of financial engineering – our own symbology numbering scheme to avoid those kinds of dependencies on external references. Our own instrument ID. It’s kaChing’s instrument ID. Everything is referenced that way. At a high level, we really try from the start to protect ourselves from external constraints, keeping the conversions between the external domains and our view of the world at the boundary, at the outskirts of the system, so that within our system everything can be as consistent and as modern as we want. I’ve seen many systems where external constraints were impacting the core and making it very hard to iterate.
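To make the boundary idea concrete, here is a minimal Python sketch, offered as an editorial illustration only. The class names `InstrumentId` and `SymbolBoundary`, the numeric ID, and the renamed ticker `IBMX` are all hypothetical; the interview does not describe how kaChing’s instrument IDs are actually implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstrumentId:
    """Internal identifier; code inside the system only ever sees this."""
    value: int


class SymbolBoundary:
    """Translates external ticker symbols to internal ids at the system edge."""

    def __init__(self):
        self._by_symbol = {}

    def register(self, symbol, instrument_id):
        self._by_symbol[symbol] = instrument_id

    def to_internal(self, symbol):
        return self._by_symbol[symbol]   # raises KeyError for unknown symbols

    def rename(self, old_symbol, new_symbol):
        """An external ticker change touches only this table, not the core."""
        self._by_symbol[new_symbol] = self._by_symbol.pop(old_symbol)


if __name__ == "__main__":
    boundary = SymbolBoundary()
    boundary.register("IBM", InstrumentId(42))
    boundary.rename("IBM", "IBMX")          # hypothetical ticker change
    print(boundary.to_internal("IBMX"))
```

Because portfolios, prices, and trades would all refer to the internal ID, a change in the outside world is absorbed by one mapping rather than rippling through the core.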

Lee:

Which makes for a tightly coupled system. So you’re trying to make the coupling looser between computing entities.

Pascal:

Yeah.

Lee:

There’s just a lot of stuff we’ve talked about just in three minutes. Two simple words: continuous deployment, and a lot of investment in technical capabilities underneath that. A lot of investment in build automation. A lot of investment in how the application is architected such that it can be co-deployed and co-resident with multiple versions. You have integration testing, unit testing, scale and capacity in running those tests a lot and randomizing, and then you went into monitoring, right? So I mean to do a continuous deployment system in an organization that is pretty large, that would probably be a pretty big transform, but since you guys are doing it right from the get-go, right from the start…

Pascal:

I think it would be extremely hard for an architecture, for a company culture that is not driven by test-driven development to then shift gears and decide “In one year we’re going to do continuous deployment. We need to hit all those milestones to get there.” It’s a very difficult company culture to shift. Everything in our engineering processes is geared towards having a stable system at every version, having multiple versions in production, having no downtime, and database upgrades that are always forward and backward compatible. It’s really ingrained in many things, and helping engineers work at every level to achieve all the different little things that seamless continuous deployment requires is quite hard, which is why doing that from the start is obviously much easier. I think it would be very hard to take a large project and shift it to doing CD.

Eishay:

TDD, or test-driven development, is the fundamental. It’s at the core. If continuous deployment is the cherry on top, TDD is the base. Without that you just can’t…

Pascal:

And something that one of our engineers, John Hitchings, commented about was our view of testing. He was saying, “At my previous company I would be doing testing to make sure I didn’t make any blatant mistakes, but at kaChing I do testing to make sure that I’ve really documented all of my feature and am protecting my feature from people who are coming next week and changing it.”

Lee:

Is that behavior driven development?

Pascal:

It’s really writing specs as tests. If I write a feature and then I go on vacation, anybody in the company should be able to go and change it. I need to be able to document it in code sufficiently well to enable my peers to come and change it in a safe way. The tests aren’t there to help me. The tests are there to protect my little silo of code. It’s a very different approach to testing.

Eishay:

We don’t have any dark code areas in our system. Anyone can get into our system and do major refactoring with the confidence that if the tests pass then it’s okay. Of course he has to test anything he adds, but I can be pretty confident that I can move classes from one place to another or change behaviors, and if the tests pass it’s good. At other companies I used to work at, there were a lot of places where a developer had a piece of code, then left the company or moved on to something else, and that part of the code was locked. Nobody can touch it, and it’s very scary.

Lee:

Fragile. Yeah. Let’s talk about confidence. So I walked in, it looked like you kicked off a build and that was running. Do you think it’s probably already in production right now while we’re sitting in the conference room talking about it?

Eishay:

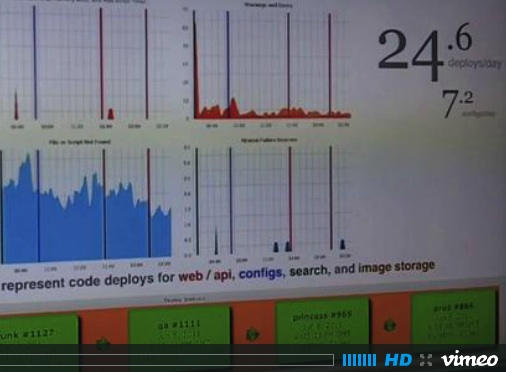

Oh yeah. We say five minutes, but in practice it’s probably more like four minutes from when I commit something until it’s out there. It’s very typical that I do three or four commits, change 20 lines of code here and there, and oh, it’s in production. It’s not uncommon that we have 20 or 30 releases of services a day.

Lee:

That’s incredible. I think most organizations are happy with a week or every other week and you’re doing it 20 times a day. That’s something to really be proud of, and by the way, I was really glad to see David Fortunato’s blog. There’s a lot to be proud of. That’s just a great post.

Eishay:

Thank you.

Lee:

It’s really good.

Pascal:

We’re talking a lot about the technical aspects, which are a lot of fun, but at the business level we’ve been able to launch kaChing Pro from idea to having clients using it in one month, and kaChing Pro is essentially Google Analytics meets Salesforce for investment managers. It has full stats. It gives the manager a kind of CRM system to manage all of his clientele, a private storefront to onboard new clients, and basically a little bank interface where their customers can come in, log in, see their brokerage statements, and trade. Being able to turn the gears so quickly on a product is really key.

Lee:

Which is the key part of the Lean Startup idea that Eric Ries was bringing up. That was the best-written documentation of the business value of continuous deployment and of why to do this. I’ve been thinking about continuous deployment type principles from a technical perspective to help engineers do their job. Lean Startup documents why to do CD from a business perspective. I’m glad you brought that up. That’s a really good point.

Eishay:

For instance, one of our customers contacted Jonathan, one of our business guys, and told him that something didn’t make sense in our workflow, and it felt like we needed to change it right away. He called the customer back after 15 minutes or half an hour and asked how it looked now. We can immediately change things, deploy them, and check how the market or the customers react.

Lee:

The way I’ve usually seen this done is that the customer has a discussion with a business person and a developer, the developer runs a prototype of the next version and shows it to the customer, the customer likes it, and then it takes four weeks or longer to get it into the production system. The way you’re doing it, it’s checked in, it looks good to you, you put it out there, and the customer goes onto a live site and sees it.

Eishay:

Sometimes we have “experiments”, which is a term coined by Google. We’ll test full features with a select group, just like you would A/B test parts of the site… except it is for full features!

Pascal:

I’ll give you an example. I think one of the misunderstandings about pushing code to production is that pushing code is equivalent to a release, when really those two things are completely disconnected. Pushing code is, well, I have inventory in my Subversion. I need to get that inventory out in the store in front of customers. A release, by contrast, is unveiling a new aisle. The aisle can be there in the store; it’s just not going to yield.

So what we’ll very often do is have the next generation of our website live, but you need to have a little code to be able to see it, or your user needs to be put in a specific experiment, and then we show that website to you, very similar to Google’s homepage being shown to 2% of its user base. You basically have the two versions of the website running at the same time and show one selectively. So before a PR launch we can have only the reporters look at the live website on KaChing.com with all of the new hype while everybody else doesn’t see it, and then when the marketing person is happy they just flip the switch and it goes public.

Lee:

Is that on separate servers and separate application or is it the same server?

Pascal:

No, same server. It just selectively decides: user 23, you’re in that experiment. Here, I’ll show you that website.
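One common way to make that per-user decision on a single server is deterministic bucketing. The Python sketch below is an editorial illustration, not kaChing’s or Google’s implementation: the function `in_experiment`, the experiment name `new-site`, the hash-based bucketing, and the allowlist for hand-picked users (such as reporters before a launch) are all assumptions.

```python
import hashlib


def in_experiment(user_id, experiment, percent, allowlist=frozenset()):
    """Decide whether a user sees an experiment.

    Users on the allowlist always see it. Everyone else is hashed into one
    of 100 buckets, and the first `percent` buckets are enrolled. The same
    user always lands in the same bucket for a given experiment.
    """
    if user_id in allowlist:
        return True
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return bucket < percent


if __name__ == "__main__":
    reporters = frozenset({23})
    # Before launch: 0% of the public, but user 23 is explicitly enrolled.
    print(in_experiment(23, "new-site", 0, reporters))
    # "Flipping the switch" is then just raising percent to 100.
    print(in_experiment(99, "new-site", 100))
```

Since enrollment is a pure function of the user and the experiment name, both versions of the site can live in the same deployed code, and going public is a configuration change rather than a code push.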

Lee:

There’s a book called Visible Ops and it says that 80 percent of your problems in operations come from changes. This can cause a conflict between your operations staff and your development staff because change is met with great suspicion. Maybe resistance, but it’s definitely going to be a point of scrutiny. Testing is probably the biggest thing that gives you the confidence that the change isn’t going to kill you. And if it did fail, you are missing a test.

Eishay:

And we’re not this type of company. We don’t have ops operations.

Lee:

I would argue you’re ops, but yeah. [Laughs]

Eishay:

We also don’t have a standard QA. Since we deploy all the time, there’s no person that looks at the code after every point.

Lee:

I think you built it – the console that you showed me functions as QA. QA runs the test and signs off on the results and you’re basically automating that function.

Pascal:

QA is classically associated with two functions. There’s making sure you’ve built per spec; specs being human readable, only humans can do that part. But once the spec is fully understood and disambiguated, checking against it can be automated. I think many people kind of mix the two and don’t automate QA. You should clearly separate the two: attaining a spec that is fully understood, and then making sure the checking is fully automated. Then you can have humans do the interesting thing, like the product manager saying, “This flow does look like what I had in my mind”, when that viewpoint was not encoded into a test. That part can never be automated, because it comes from a human brain and needs to be understood by a human brain.

Lee:

What I think I’ve learned today is in order to do continuous release and continuous deployment you have to have continuous testing. It sounds to me like if you don’t have that you’re not gonna have the confidence.

Eishay:

It drives a fundamental culture of thinking, an engineering culture, which means that the engineer who writes the code knows that there’s no second tier of QA people who will check that the small feature change is good. He has to know that he must write all the tests himself to fully cover any feature change. At places that have formal QA, I’ve seen people change something they didn’t fully test because they know someone will later have a second look at this feature or this change.

When a feature is first released you need to have a person, probably the product manager, look at it and see if it’s right, but afterwards they’ll never look at it again because they’ll assume the engineer wrote the features. Of course the software changes all the time because we refactor, we extract services to another machine. We do all sorts of stuff, but having no other person to do the QA makes us as engineers do better testing and write better tests.

Great interview, good stuff, totally see that this is the way to go.

Good job guys.

I would be very interested in the kaChing definition of unit testing. I know it could lead to a void discussion but I heard different approaches to this subject 😉

Lee: Extremely interesting interview. Thanks for sharing it. Friends of mine are starting a new online bank in the States. Definitely going to point them to this post and in your general direction. I see some potential synergy there. Ping me the next time you are passing through Paris or via twitter. http://www.twitter.com/aainslie

Lee, very interesting interview. I particularly liked the analogy of code as "inventory" and the comparison of a release to an "aisle".

Push the release and deployment down into the development lifecycle; make it automated and testable. Don’t leave it as an afterthought in a far away system. Great read!