Deployment management design patterns for DevOps

Alex Honor

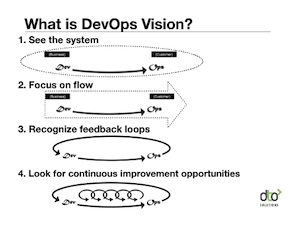

If you are an application developer, you are probably accustomed to drawing from established design patterns. A system of design patterns can play the role of a playbook, offering solutions based on combining complementary approaches. Awareness of design anti-patterns can also be helpful in avoiding future problems arising from typical pitfalls. Ideally, design patterns can be composed together to form new solutions. Patterns can also provide an effective vocabulary for architects, developers, and administrators to discuss problems and weigh possible solutions.

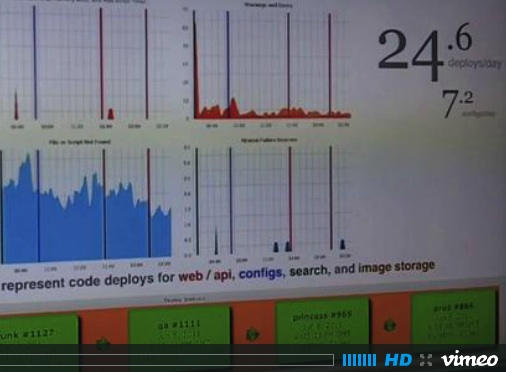

It’s a topic I have discussed before, but what happens once the application code is completed and must run in integrated operational environments? For companies that run their business over the web, the act of deploying, configuring, and operating applications is arguably as important as writing the software itself. If an organization cannot efficiently and reliably deploy and operate the software, it won’t matter how good the application software is.

But where are the design patterns embodying best practices for managing software operations? Where is the catalog of design patterns for managing software deployments? What is needed is a set of design patterns for managing the operation of a software system in the large. Design patterns like these would be useful to those who automate any of these tasks, and would help tool developers who have adopted the “infrastructure as code” philosophy.

So what are typical design problems in the world of software operation?

The challenges faced by software operations groups include:

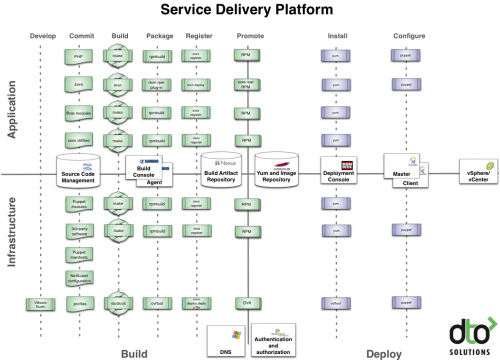

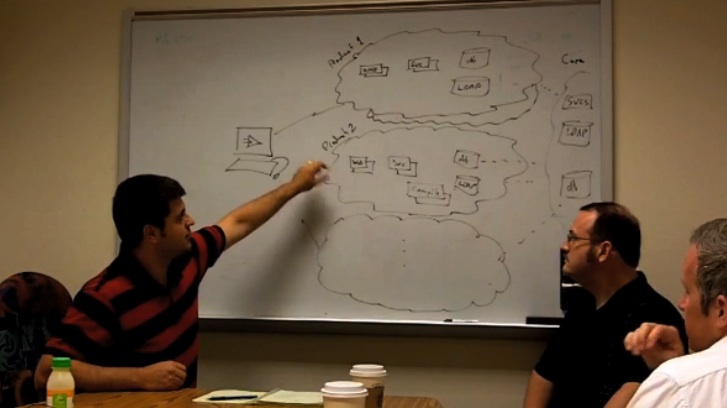

- Application deployments are complex: they are based on technologies from different vendors, are spread out over numerous machines in multiple environments, use different architectures, and are arranged in different topologies.

- Management interfaces are inconsistent: every application component and supporting piece of infrastructure has a different way of being managed. This includes both how components are controlled and how they are configured.

- Administrative management is hard to scale: as the layers of software components increase, so does the difficulty of coordinating actions across them. This is especially difficult when the same application can be set up to run in a minimal footprint while another deployment of it can be designed to support massive load.

- Infrastructure size differences: software deployments must run in different sized environments. Infrastructure used for early integration testing is smaller than that supporting production. Infrastructure based on virtualization platforms also introduces the possibility of environments that can be re-scaled based on capacity needs.

Facing these challenges firsthand, I have evolved a set of deployment management design patterns using a “divide and conquer” strategy. This strategy helps identify minimal domain-specific solutions (i.e., the patterns) and how to combine them in different contexts (i.e., using the patterns systematically). The set of design patterns also includes anti-patterns. I call the system of design patterns “PAGODA”. The name is really not important, but as an acronym it can mean:

- PAtterns GOod-for Deployment Administration

- PAckaGe-Oriented Deployment Administration

- Patterns for Application and General Operation for Deployment Administrators

- Patterns for Applications, Operations, and Deployment Administration

Pagoda as an acronym might be a bit of a stretch, but the image of a pagoda strikes me as a picture of how the set of patterns can be combined to form a layered structure.

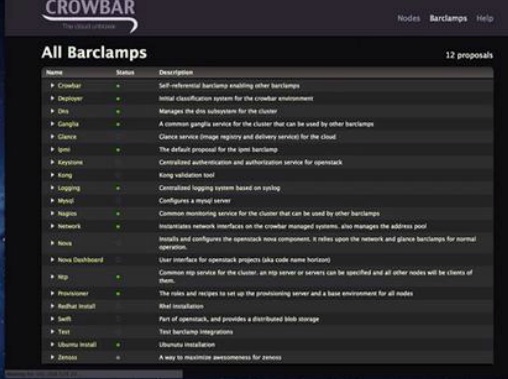

Here is a diagram of the set of design patterns arranged by how they interrelate.

The diagram style is inspired by a great reference book, Release It! You can see the anti-patterns are colored red, while the design patterns that mitigate them are in green.

Here is a brief description of each design pattern:

| Pattern | Description | Mitigates | Alternative names |

|---|---|---|---|

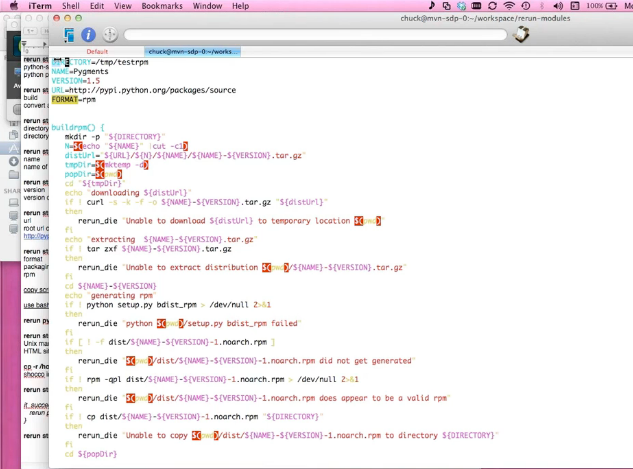

| Command Dispatcher | A mechanism used to lookup and execute logically organized named procedures within a data context permitting environment abstraction within the implementations. | Too Many Tools | Command Framework |

| Lifecycle | A formalized series of operational stages through which resources comprising application software systems must pass. | Control Hairball | Alternative names |

| Orchestrator | Encapsulates a multi-step activity that spans a set of administrative steps and or other process workflows. | Control Hairball, Too Many Cooks | Process Workflow, Control Mediator, |

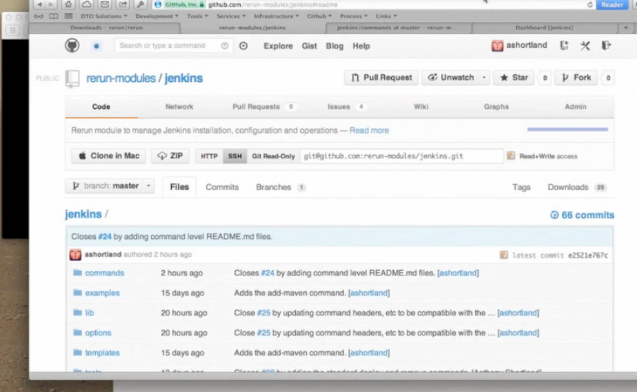

| Composable Service | A set of independent deployments that can assembled together to support new patterns of integrated software systems. | Monolithic Environment | Composable Deployments |

| Adaptive Deployment | Practice of using an environment-independent abstraction along with a set of template-based automation, that customizes software and configuration at deployment time. | Control Hairball, Configuration Bird Nest, Unmet Integration | Environment Adaption |

| Code-Data Split | Practice of separating the executable files (the product) away from the environment-specific deployment files, such as configuration and data files that facilitates product upgrade and co-resident deployments. | Service Monolith | Software-Instance Split |

| Packaged Artifact | A structured archive of files used for distributing any software release during the deployment process. | Adhoc Release | Alternative names |
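To make the Command Dispatcher description more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions, not part of any particular tool: the `Dispatcher` class, the `web` module, the `start` command, and the host names are all hypothetical. It shows named procedures organized by module, looked up by name, and executed within a data context so the implementation itself stays environment-neutral.

```python
from typing import Callable, Dict

# Hypothetical names throughout: Dispatcher, the "web" module, and the
# "start" command are illustrative, not taken from an existing tool.
Context = Dict[str, str]
Handler = Callable[[Context], str]


class Dispatcher:
    """Looks up named procedures, grouped by module, and runs them in a context."""

    def __init__(self) -> None:
        self._commands: Dict[str, Dict[str, Handler]] = {}

    def register(self, module: str, name: str, handler: Handler) -> None:
        # Commands are organized logically: module first, then command name.
        self._commands.setdefault(module, {})[name] = handler

    def dispatch(self, module: str, name: str, context: Context) -> str:
        try:
            handler = self._commands[module][name]
        except KeyError:
            raise LookupError(f"unknown command: {module} {name}") from None
        return handler(context)


def start_web(ctx: Context) -> str:
    # The implementation reads environment details from the data context
    # instead of hard-coding them.
    return f"starting web server on {ctx['host']}:{ctx['port']}"


dispatcher = Dispatcher()
dispatcher.register("web", "start", start_web)

# The same named command runs unchanged against two assumed environments.
staging = {"host": "stage01", "port": "8080"}
production = {"host": "prod01", "port": "80"}

print(dispatcher.dispatch("web", "start", staging))
print(dispatcher.dispatch("web", "start", production))
```

The operator sees one consistent interface (`module` plus command name), which is how the pattern is meant to counter Too Many Tools; what each handler actually does behind that interface is left open.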

The anti-patterns might be more interesting since they represent practices that have definite disadvantages:

| Anti-Pattern | Description | Mitigates | Alternative names |

|---|---|---|---|

| Too Many Tools | Each technology and process activity needs its own tool, resulting in a multitude of syntaxes and semantics that must each be understood by the operator, and makes automation across them difficult to achieve. | Command Dispatcher | Tool Mishmash, Heterogeneous interfaces |

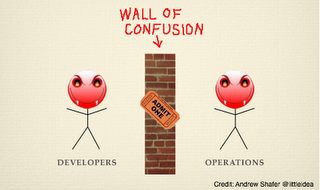

| Too Many Cooks | A common infrastructure must be maintained by various disciplines but each use their own tools to affect change increasing chances for conflicts and overall negative effects. | Control Mediator | Unmediated Action |

| Control Hairball | A process that spans activities that occur across various tools and locations in the network, is implemented in a single piece of code for convenience but turns out to be very inflexible, opaque and hard to maintain and modify. | Control Mediator, Adaptive Deployment, Workflow | |

| Configuration Bird Nest | A network of circuitous indirections used to manage configuration and seem to intertwine like a labyrinth of straw in a bird nest. People often construct a bird nest in order to provide a consistent location for an external dependency. | Environment Adaptation | |

| Service Monolith | Complex integrated software systems end up being maintained as a single opaque mass with no-one understanding entirely how it was put together, or what elements it is comprised, and how they interact. | Code-Data Split, Composable Service | House Of Cards, Monolithic Environment |

| Adhoc Release | The lack of standard practice and distribution mechanisms for releasing application changes. | Packaged Artifact |
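As a rough illustration of how Adaptive Deployment can stand in for a Configuration Bird Nest, the sketch below keeps one environment-independent template and fills it in at deployment time from per-environment data. It uses Python's standard `string.Template`; the configuration keys, environment names, and host names are assumptions made up for the example.

```python
from string import Template

# Hypothetical template and settings, for illustration only.
CONFIG_TEMPLATE = Template(
    "listen_port=$port\n"
    "db_url=jdbc:mysql://$db_host/$db_name\n"
)

# One flat set of values per environment, rather than chains of indirection.
ENVIRONMENTS = {
    "qa": {"port": "8080", "db_host": "qa-db", "db_name": "app_qa"},
    "production": {"port": "80", "db_host": "prod-db", "db_name": "app"},
}


def render_config(environment: str) -> str:
    """Customize the configuration for one environment at deployment time."""
    settings = ENVIRONMENTS[environment]
    # substitute() raises KeyError if the template names a value that the
    # environment does not define, so gaps surface during deployment.
    return CONFIG_TEMPLATE.substitute(settings)


print(render_config("qa"))
print(render_config("production"))
```

The template is the abstraction shared by every environment; only the data differs, which keeps each environment's settings visible in one place.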

Of course, this isn’t the definitive set of deployment management patterns. No doubt you have discovered and developed your own. It is useful to identify and catalog them so they can be shared with others who will face scenarios already examined and resolved. Perhaps the set offered here will spur a greater effort.