Use the word “process” and confusion ensues

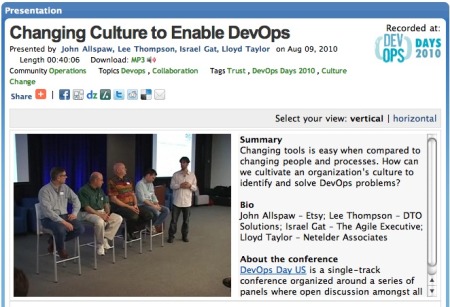

Damon Edwards

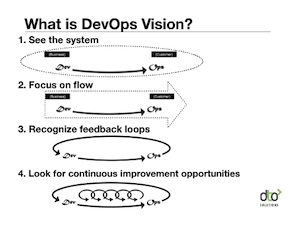

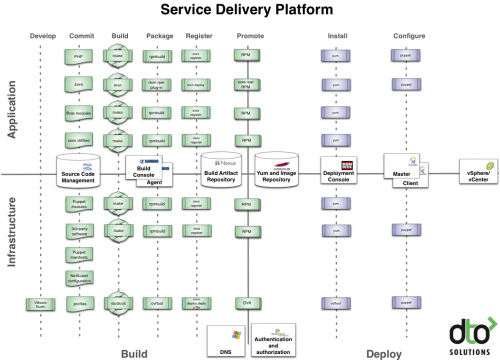

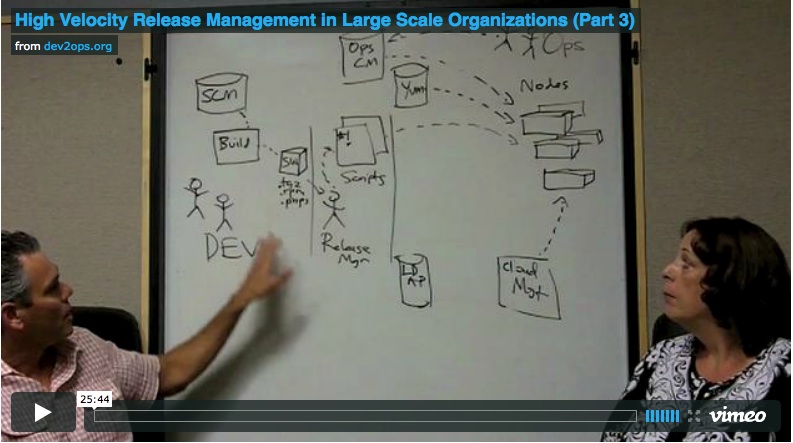

In my last post about the River of News for Monitoring concept, process played a central role. In conversations I’ve had since writing that post, it has become clear to me that “process” is a tricky, overloaded word. There are lots of processes whirling through an enterprise. There are business processes (customer transactions, supplier transactions, human resources, finance, etc.). There are application processes (pretty self-explanatory). Then there are IT processes. My view of IT processes is that they are the actions that transform your IT assets and their related environments. (Note: you could obviously use an ITIL-like definition born from a standards body at this point, but I’m looking for a simple set of buckets to use without causing further debate.)

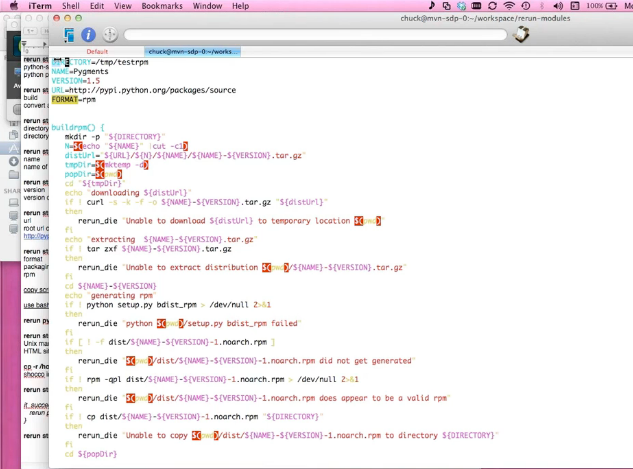

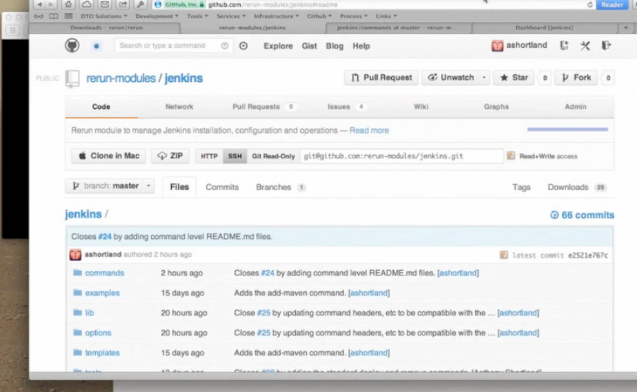

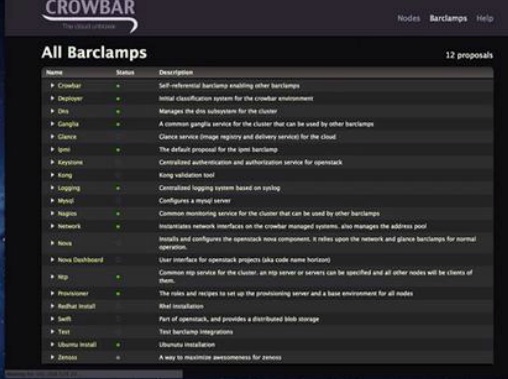

There are plenty of tools that track and examine business processes. There are plenty of tools that track and examine application processes. However, when it comes to IT processes, the available tooling is quite thin. Sure, there are tools (e.g. ticketing systems, bug trackers, approval workflows, etc.) that track the HUMAN aspect of your IT processes, but they give you very little visibility into what actually took place at the system or application level. To make matters worse, under their fancy dashboards, most of these systems rely almost entirely on a human to tell them what was done or observed. In today’s complex and highly distributed environments, it’s almost impossible to get an accurate picture of what really took place when the record depends on self-reporting like this.

Skeptical? Give the status quo a test. Walk into any sizable IT operation and ask them to do two things:

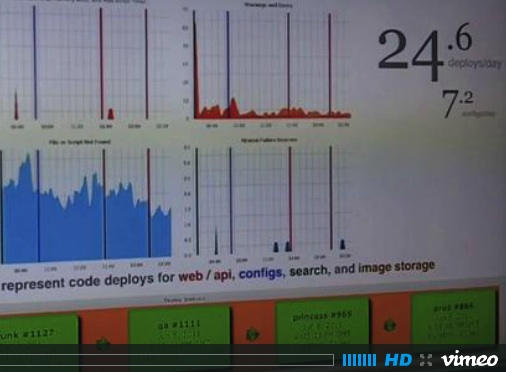

1. Show you all of the deployment activity, with the context that those activities occurred in, that took place in [pick some slice of their environment] between [pick two arbitrary points in time]. This doesn’t mean things like “Bob said he completed the steps of this process” or “Jane said she ran this set of scripts”. I’m referring to evidence of the actual technical activities that took place across the boxes.

2. Show you the entire lifecycle of [pick an arbitrary application package], from when it was built and packaged to all of its deployments throughout Dev, QA, and Prod environments.

I would be shocked if they had this information readily available. In most cases, they couldn’t produce this information at all. For many companies these systems are their source of revenue, their “factory floor” if you will, and yet they can’t answer these simple questions. In any other industry this would be wholly unacceptable.
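To make the two questions above concrete, here is a minimal sketch of what answering them could look like if every technical activity were recorded by the systems themselves rather than reported by a person. Everything in it is an assumption made for illustration: the `DeployEvent` schema, the field names, the function names, and the sample hosts, packages, and dates are all hypothetical and do not describe any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class DeployEvent:
    """One machine-recorded activity (hypothetical schema)."""
    timestamp: datetime
    host: str
    environment: str  # e.g. "build", "dev", "qa", "prod"
    package: str
    version: str
    action: str       # e.g. "built", "deployed"
    context: str      # the job or command that triggered the action


def activity_between(events, environment, start, end):
    """Question 1: all activity in one slice of the environment in a time window."""
    hits = [e for e in events
            if e.environment == environment and start <= e.timestamp <= end]
    return sorted(hits, key=lambda e: e.timestamp)


def lifecycle(events, package, version):
    """Question 2: every recorded step for one package version, oldest first."""
    hits = [e for e in events if e.package == package and e.version == version]
    return sorted(hits, key=lambda e: e.timestamp)


# Made-up sample data, purely for illustration.
events = [
    DeployEvent(datetime(2024, 1, 2, 9, 0), "build01", "build", "billing-app", "1.4.2", "built", "nightly-build"),
    DeployEvent(datetime(2024, 1, 2, 10, 0), "dev01", "dev", "billing-app", "1.4.2", "deployed", "dev-push"),
    DeployEvent(datetime(2024, 1, 3, 14, 0), "qa01", "qa", "billing-app", "1.4.2", "deployed", "qa-release"),
    DeployEvent(datetime(2024, 1, 5, 8, 0), "prod01", "prod", "billing-app", "1.4.2", "deployed", "prod-release"),
    DeployEvent(datetime(2024, 1, 5, 8, 5), "prod02", "prod", "billing-app", "1.4.2", "deployed", "prod-release"),
    DeployEvent(datetime(2024, 1, 5, 11, 0), "prod01", "prod", "search-app", "2.0.0", "deployed", "prod-release"),
]

if __name__ == "__main__":
    window = activity_between(events, "prod", datetime(2024, 1, 5), datetime(2024, 1, 6))
    for e in window:
        print(e.timestamp, e.host, e.package, e.version, e.action, e.context)

    for e in lifecycle(events, "billing-app", "1.4.2"):
        print(e.timestamp, e.environment, e.host, e.action)
```

The queries themselves are trivial. The hard part, and the gap described above, is getting trustworthy events like these captured in the first place.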

This is the situation we are working on changing.