Update 1: Wikipedia now has a pretty good DevOps page

Update 2: Follow-up posts on the business problems that DevOps solves and the competitive business advantage that DevOps can provide.

If you are interested in IT management, and web operations in particular, you might have recently heard the term “DevOps” being tossed around. The #DevOps tag pops up regularly on Twitter. DevOps meetups and DevOpsDays conferences are gaining steam.

DevOps is, in many ways, an umbrella concept that refers to anything that smooths out the interaction between development and operations. However, the ideas behind DevOps run much deeper than that.

What is DevOps all about?

DevOps is a response to the growing awareness that there is a disconnect between what is traditionally considered development activity and what is traditionally considered operations activity. This disconnect often manifests itself as conflict and inefficiency.

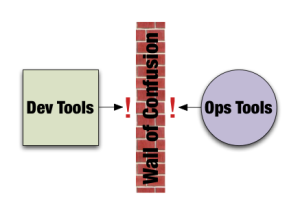

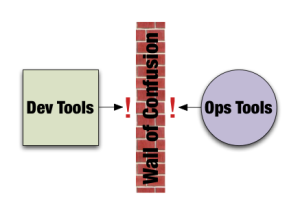

As Lee Thompson and Andrew Shafer like to put it, there is a “Wall of Confusion” between development and operations. This “Wall” is caused by a combination of conflicting motivations, processes, and tooling.

Development-centric folks tend to come from a mindset where change is the thing that they are paid to accomplish. The business depends on them to respond to changing needs. Because of this relationship, they are often incentivized to create as much change as possible.

Operations folks tend to come from a mindset where change is the enemy. The business depends on them to keep the lights on and deliver the services that make the business money today. Operations is motivated to resist change as it undermines stability and reliability. How many times have we heard the statistic that 80% of all downtime is due to those self-inflicted wounds known as changes?

Both development and operations fundamentally see the world, and their respective roles in it, differently. Each believes that it is doing the right thing for the business… and in isolation they are both correct!

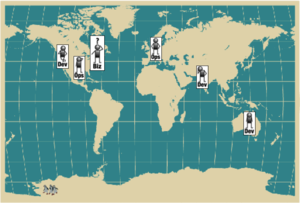

To make matters worse, development and operations teams tend to fall into different parts of a company’s organizational structure (often with different managers and competing corporate politics) and often work at different geographic locations.

Adding to the Wall of Confusion is the all too common mismatch in development and operations tooling. Take a look at the popular tools that developers request and use on a daily basis. Then take a look at the popular tools that systems administrators request and use on a daily basis. With a few notable exceptions, like bug trackers and maybe SCM, it’s doubtful you’ll see much interest in using each other’s tools or significant integration between them. Even where the types of tools overlap, each group will often run its own implementation.

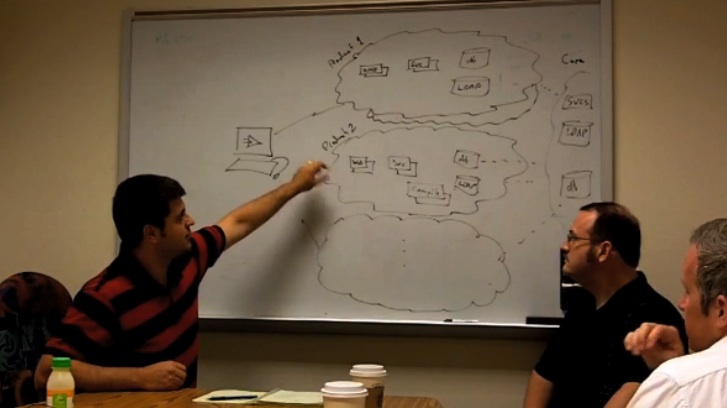

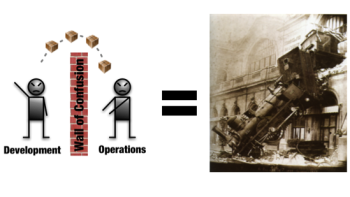

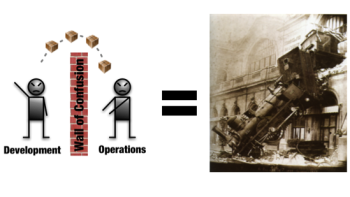

Nowhere is the Wall of Confusion more obvious than when it comes time for application changes to be pushed from development to operations. Some organizations call it a “release”, some call it a “deployment”, but one thing they can all agree on is that trouble is likely to ensue. The following scenario is generalized, but if you’ve ever played a part in this process it should ring true.

Development kicks things off by “tossing” a software release “over the wall” to Operations. Operations picks up the release artifacts and begins preparing for their deployment. Operations manually hacks the deployment scripts provided by the developers or creates their own scripts. They also hand-edit configuration files to reflect the production environment, which is significantly different from the Development or QA environments. At best they are duplicating work that was already done in previous environments; at worst they are about to introduce or uncover new bugs.

Operations then embarks on what they understand to be the currently correct deployment process, which at this point is essentially being performed for the first time due to the script, configuration, process, and environment differences between Development and Operations. Of course, somewhere along the way a problem occurs and the developers are called in to help troubleshoot. Operations claims that Development gave them faulty artifacts. Developers respond by pointing out that it worked just fine in their environments, so it must be the case that Operations did something wrong. Developers have a difficult time even diagnosing the problem because the configuration, file locations, and procedure used to get into this state are different from what they expect (if security policies even allow them to access the production servers!).

Time is running out on the change window and, of course, there isn’t a reliable way to roll the environment back to a previously known good state. So what should have been an uneventful deployment ends up being an all-hands-on-deck fire drill where a lot of trial and error finally hacks the production environment into a usable state.
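One common way to reduce the hand-editing described in this scenario is to generate every environment’s configuration from a single shared template, so that production is rendered by the same code path that was already exercised in Development and QA. Here is a minimal Python sketch of that idea. The setting names, host names, and list of environments are hypothetical, invented purely for illustration.

```python
from string import Template

# Hypothetical template shared by every environment; only the values differ.
CONFIG_TEMPLATE = Template(
    "db_host=$db_host\n"
    "db_port=$db_port\n"
    "log_level=$log_level\n"
)

# Hypothetical per-environment values, kept in version control with the code.
ENVIRONMENTS = {
    "dev": {"db_host": "localhost", "db_port": 5432, "log_level": "DEBUG"},
    "qa": {"db_host": "qa-db.internal", "db_port": 5432, "log_level": "INFO"},
    "production": {
        "db_host": "prod-db.internal",
        "db_port": 5432,
        "log_level": "WARNING",
    },
}


def render_config(environment: str) -> str:
    """Render the configuration file text for one named environment."""
    # substitute() raises KeyError if the template needs a value that the
    # environment does not define, so gaps surface before deployment.
    return CONFIG_TEMPLATE.substitute(ENVIRONMENTS[environment])


if __name__ == "__main__":
    print(render_config("production"))
```

Because `Template.substitute` raises `KeyError` when a value is missing, an incomplete environment definition fails at render time rather than halfway through a change window.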

While deployment is the most obvious pain point, it is only one part of the need for DevOps. As John Allspaw points out, the need for cooperation between development and operations starts well before and continues long after deployment.

What’s the benefit of DevOps?

DevOps is a powerful idea because it resonates on so many different levels.

From the perspective of individuals toiling in hands-on development or operational roles, DevOps points towards a life that is free from the source of so many of their hassles. It’s by no means a magical panacea, but if you can make DevOps work you are removing barriers that are both a significant time-sink and a source of morale-killing frustration. It’s a simple calculation to make: invest in making DevOps a reality and we all should be more efficient, increasingly nimble, and less frustrated. Some may argue that DevOps is a lofty or even farfetched goal, but it’s difficult to argue that you shouldn’t try.

For the business, DevOps contributes directly to enabling two powerful and strategic business qualities, “business agility” and “IT alignment”. These may not be terms that the troops in the IT trenches worry about on a daily basis, but they should definitely get the attention of the executives who approve the budgets and sign the checks.

A simple definition of IT alignment is “a desired state in which a business organization is able to use information technology (IT) effectively to achieve business objectives — typically improved financial performance or marketplace competitiveness” [source].

DevOps helps to enable IT alignment by aligning development and operations roles and processes in the context of shared business objectives. Both development and operations need to understand that they are part of a unified business process. DevOps thinking ensures that individual decisions and actions strive to support and improve that unified business process, regardless of organizational structure.

A simple definition of agility in a business context is the “ability of an organization to rapidly adapt to market and environmental changes in productive and cost-effective ways” [source].

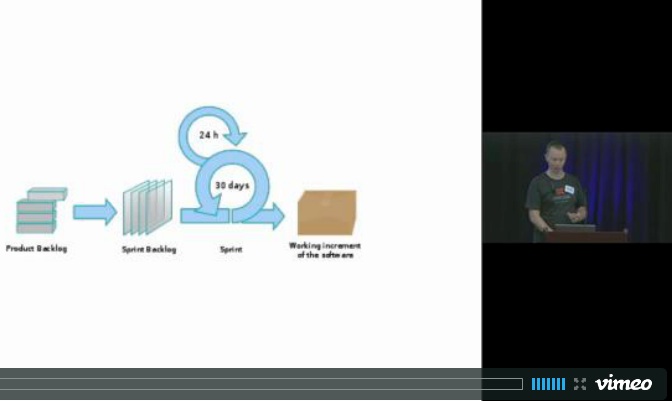

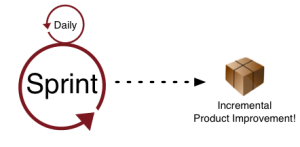

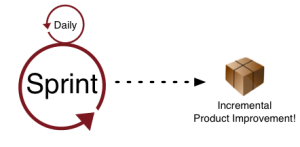

Of course, developers also have their own specialized meaning of the word “agile”, but the goals are very similar. Agile development methodologies are designed to keep software development efforts aligned with customer/company goals and produce high quality software despite changing requirements. For most organizations, Scrum, the iterative project management methodology, is the face of Agile.

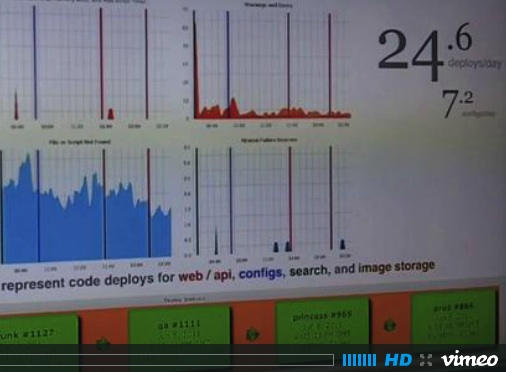

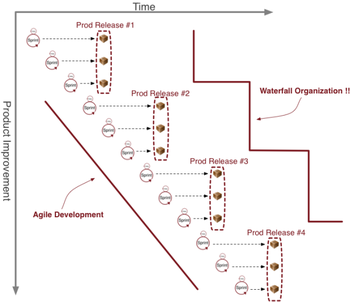

Agile promises close interaction and fast feedback between the business stakeholders making the decisions and the developers acting on those decisions. If you look at the output of a well-functioning Agile development group you should see a steady stream of improvement that is in tune with business needs.

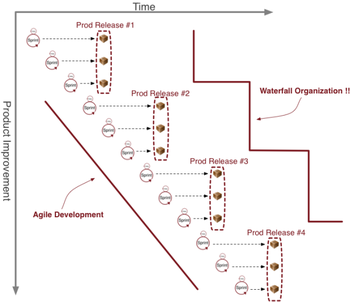

However, when you step back and look at the entire development-to-operations lifecycle from an enterprise point of view, that Agile stream and its associated benefits are often obscured. The Wall of Confusion leads to a dissociation of the application lifecycle. Development works at one pace and Operations works at another. The long intervals between production deployments, in effect, turn the Agile efforts of an organization right back into the waterfall lifecycle it was trying to avoid. No matter how Agile the development organization is, it’s exceedingly difficult to change the slow and lumbering nature of a business while the Wall of Confusion is in place. Andrew Rendell has a great post that tells the anecdotal story of how an organization’s cumbersome release processes turn their agile development efforts right back into a waterfall.

DevOps enables the benefits of Agile development to be felt at the organizational level. DevOps does this by allowing for fast and responsive, yet stable, operations that can be kept in sync with the pace of innovation coming out of the development process.

If you are seeking to establish a DevOps project within your organization, be sure to keep the terms “IT alignment” and “business agility” in mind.

How do we bring DevOps to life?

Like most emerging topics, it’s easier to find a consensus about the problem than it is about the solution.

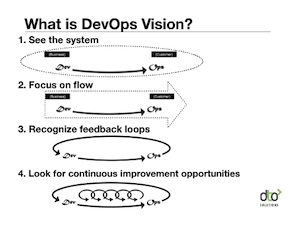

If you listen to the current DevOps conversations, there appear to be three areas of focus for DevOps-related solutions:

1. Measurement and incentives to change culture – Changing culture and reward systems is never easy. However, if you don’t change your organization’s culture, fulfilling the promise of DevOps will be difficult, if not impossible. When looking to influence culture in a business organization, you need to pay close attention to how you measure and judge performance. What you measure influences and incentivizes behavior. All parties across the development-to-operations lifecycle need to understand their stake in the larger business process of which they are a part. The success of both individuals and groups needs to be measured within the context of the success of the entire development-to-operations lifecycle. For many organizations this is a shift from more of a siloed approach to performance measurement, where each group measures and judges performance based on what matters to that specific group. This previous post I wrote dives deeper into the process for getting the correct end-to-end view of measurement into place.

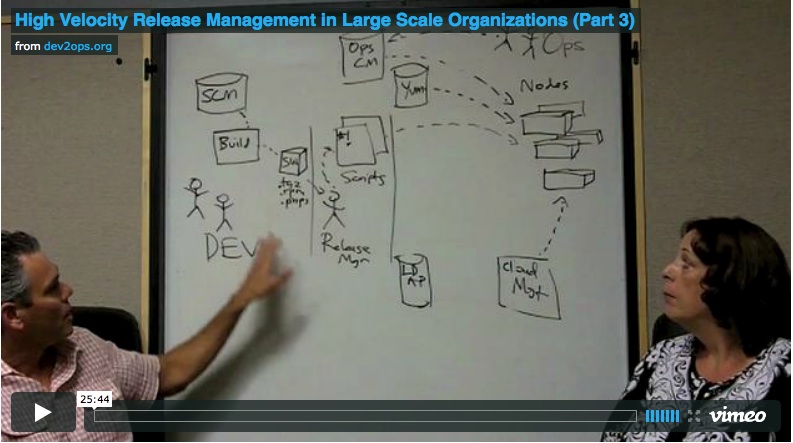

2. Unified processes – The important theme of DevOps is that the entire development-to-operations lifecycle must be viewed as one end-to-end process. Individual methodologies can be followed for individual segments of that process (such as Agile on one end and Visible Ops on the other), so long as those processes can be plugged together to form a unified process (and, in turn, be managed from that unified point-of-view). Much like the question of measurement and incentives, each organization will have slightly different requirements for achieving that unified process. Here is an excellent post by Six Sigma Blackbelt Ray Riescher on his experience bridging Scrum and ITIL.

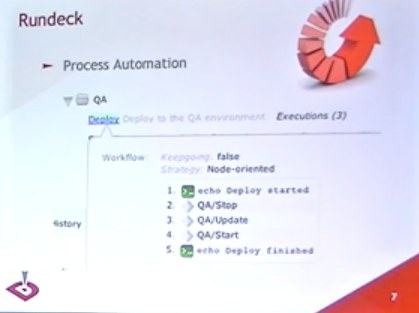

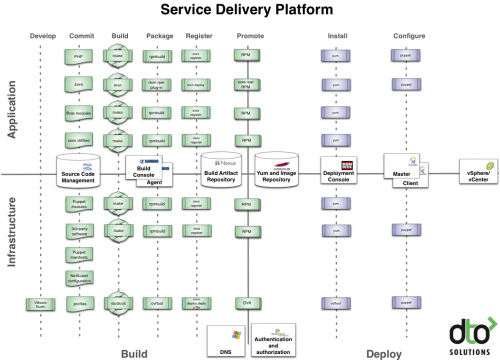

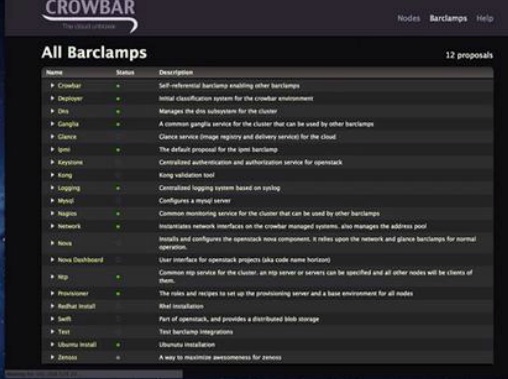

3. Unified tooling – This is the area in which most of the DevOps discussion has been focused. This isn’t surprising since it seems to be the natural reflex of technologists, for better or for worse, to jump straight into tooling discussions when looking to solve a problem. If you follow the communities of tools like Puppet, Chef, or ControlTier then you are probably already aware of the significant focus on bridging development and operations tooling. “Infrastructure as code”, “model driven automation”, and “continuous deployment” are all concepts that would fall under the DevOps banner. Alex Honor wrote a good post about some of the design patterns that toolsmiths working on DevOps tools need to worry about.

Jake Sorofman does a great job with the following overview of what types of tooling are required to make DevOps a reality:

A version-controlled software library, which ensures all system artifacts are well defined, consistently shared, and up to date across the release lifecycle. Development and QA organizations draw from the same platform version, and production groups deploy the exact same version that has been certified by QA.

Deeply modeled systems, where a versioned system manifest describes all of the components, policies and dependencies related to a software system, making it simple to reproduce a system on demand or to introduce change without conflicts.

Automation of manual tasks, taking the manual effort out of processes like dependency discovery and resolution, system construction, provisioning, update and rollback. Automation, not hordes of people, becomes the basis for command and control of high-velocity, conflict-free and massive-scale system administration.
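To make those three ideas a bit more concrete, here is a toy Python sketch of a versioned system manifest that is applied identically to every environment and can be rolled back. The `Manifest` and `Environment` classes, the package names, and the version strings are all hypothetical. This is not the API of Puppet, Chef, ControlTier, or any other real tool, and a real implementation would actually install packages where this sketch only records history.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Manifest:
    """Hypothetical versioned description of everything a system needs."""
    version: str
    packages: Tuple[Tuple[str, str], ...]  # (package name, package version)


@dataclass
class Environment:
    """An environment that changes only by applying a whole manifest."""
    name: str
    history: List[Manifest] = field(default_factory=list)

    @property
    def current(self) -> Optional[Manifest]:
        """The manifest currently deployed, or None if nothing is deployed."""
        return self.history[-1] if self.history else None

    def deploy(self, manifest: Manifest) -> None:
        """Record the manifest as deployed (a real tool would install it)."""
        self.history.append(manifest)

    def rollback(self) -> Manifest:
        """Drop the latest manifest and return to the previous one."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier manifest to roll back to")
        self.history.pop()
        return self.history[-1]


if __name__ == "__main__":
    v1 = Manifest("1.0.0", (("webapp", "1.0.0"), ("appserver", "2.1")))
    v2 = Manifest("1.1.0", (("webapp", "1.1.0"), ("appserver", "2.1")))

    qa, production = Environment("qa"), Environment("production")
    for env in (qa, production):
        env.deploy(v1)
        env.deploy(v2)  # production gets exactly what QA certified

    assert production.current == qa.current
    production.rollback()  # back to the known good state
    assert production.current == v1
```

The design choice worth noticing is that an environment never changes piecemeal. It only moves from one whole manifest to another, which is what makes “deploy the exact same version that has been certified by QA” and “rollback” cheap to express.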

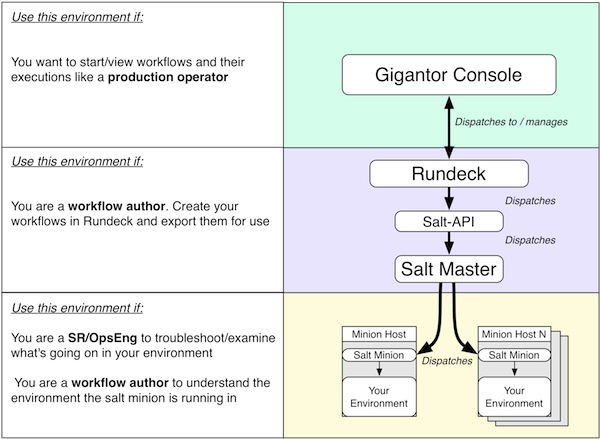

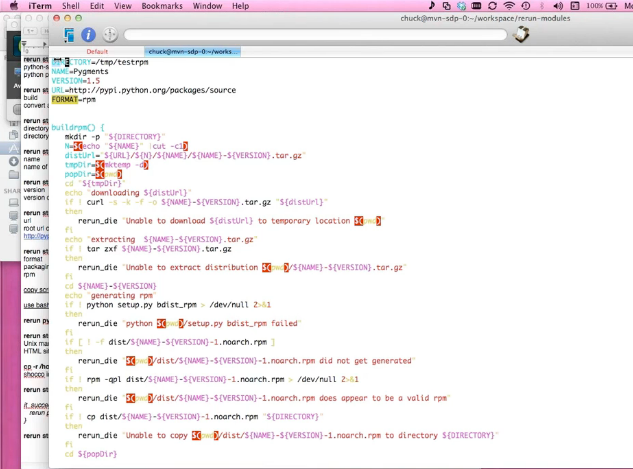

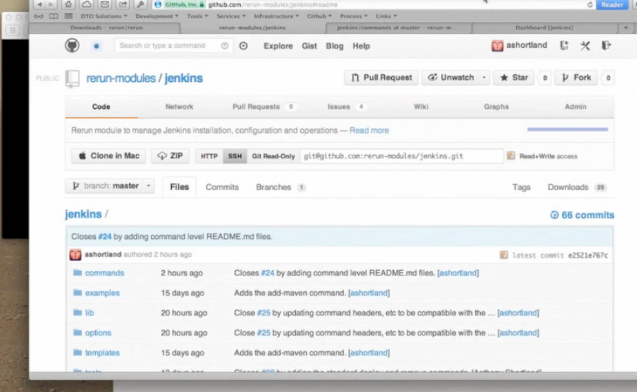

It’s essential that all individual tools be considered part of a larger toolchain that spans the entire Development to Operations lifecycle (even if tight technical integration isn’t an option). Tool choice and implementation decisions (on both the toolchain and individual tool levels) need to be made in the context of their impact on that end-to-end lifecycle. If you are wondering how that is done, take a look at this example of an open source fully automated provisioning toolchain that can be plugged into a larger Development to Operations toolchain.
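Returning to the first focus area above, measurement: one way to picture an end-to-end view is to track each change from commit to production and score development and operations together on the result. The Python sketch below is only an illustration under that assumption. The `Change` record and the two metrics (mean lead time and change failure rate) are my choices for the example, not measures prescribed anywhere in this post.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class Change:
    """Hypothetical record of one change, from commit to production."""
    committed_at: datetime
    deployed_at: datetime
    caused_incident: bool


def mean_lead_time(changes: List[Change]) -> timedelta:
    """Average time from commit to production across all changes."""
    if not changes:
        raise ValueError("at least one change is required")
    total = sum((c.deployed_at - c.committed_at for c in changes), timedelta())
    return total / len(changes)


def change_failure_rate(changes: List[Change]) -> float:
    """Fraction of deployed changes that led to a production incident."""
    if not changes:
        raise ValueError("at least one change is required")
    return sum(c.caused_incident for c in changes) / len(changes)


if __name__ == "__main__":
    changes = [
        Change(datetime(2010, 6, 1, 9), datetime(2010, 6, 3, 9), False),
        Change(datetime(2010, 6, 2, 9), datetime(2010, 6, 6, 9), True),
    ]
    print(mean_lead_time(changes))       # 3 days, 0:00:00
    print(change_failure_rate(changes))  # 0.5
```

Neither number can be improved by one group alone: lead time rewards shipping change quickly, failure rate rewards stability, and judging both sides of the wall on the pair gives them a shared stake in the whole lifecycle.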

What DevOps is not!

At the recent OpsCamp Austin, Adam Jacob from OpsCode/Chef railed against the idea that some system administrators were now seeking to change their job title to “DevOps”. I have to admit that, at the time, I was a bit skeptical that this was actually happening. However, I have since witnessed people on multiple occasions expressing this desire to rewrite job titles or establish DevOps as some sort of new role to be filled.

For example, Stephen Nelson-Smith wrote an excellent post about DevOps. While I agree with almost everything he said, I have to strongly disagree with the idea that DevOps should be a unique position or job title.

Turning “DevOps” into a new job title or special role sets a dangerous precedent. This makes DevOps someone else’s problem. You’re a DBA? Don’t worry about DevOps, that’s the DevOps team’s problem. You’re a security expert? Don’t worry about DevOps, that’s the DevOps team’s problem.

Think of it this way. You wouldn’t say “I need to hire an Agile” or “I need to hire a Scrum” or “I need to hire an ITIL” would you? No, you would just say I need to hire developers, project managers, testers, or systems administrators who understand these concepts and methodologies. DevOps is no different.

Why the name “DevOps”?

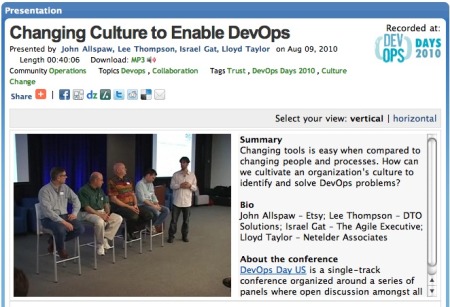

Probably because it’s catchy. It’s also a good mental image of the concept at the widest scale: when you bring Dev and Ops together you get DevOps. There have been other terms for this idea, such as Agile Operations, Agile Infrastructure, and Dev2Ops (a term we’ve been using on this blog since 2007). There are also plenty of examples of people arriving at the idea of DevOps on their own, without calling it “DevOps”. For an excellent example of this, read this recent post by Ernest Mueller or watch John Allspaw and John Hammond’s seminal presentation “10+ Deploys Per Day: Dev and Ops Cooperation at Flickr” from Velocity 2009.

For better or for worse, DevOps seems to be the name that is catching people’s imaginations. I credit the efforts of Patrick Debois for championing the term “DevOps”, bringing the first DevOps Days conference to a (successful) reality, and maintaining the devops.info site.

Be sure to join in the DevOps conversation at the upcoming DevOps Day USA conference on June 25, 2010 in Mountain View, CA. It’s the day after O’Reilly’s Velocity 2010 conference, so be sure to hit both!